tags : Image Compression

Theory

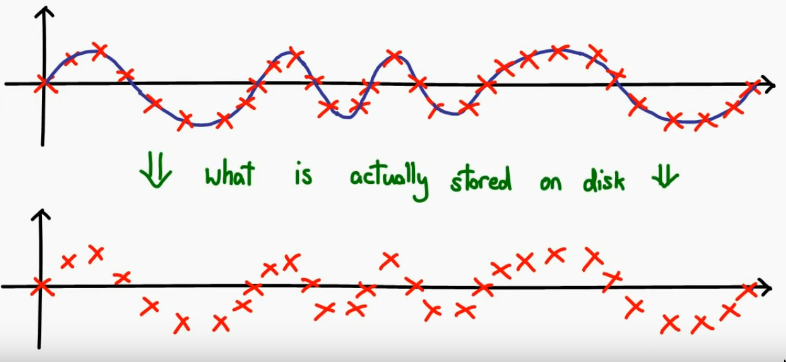

Sampling

- Axis

- Y axis: Displacement, i.e. whether the air is moving backwards or forwards

- X axis: Time

How to record sound?

- Record the movement of the air over the duration of time

- Analog: we can save the continuous waveform

- An analog audio signal has almost infinite resolution

- Digital: we cannot save the continuous waveform, we need to save it in discrete chunks, i.e. “Sampling”
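As a minimal sketch of what sampling means, the snippet below evaluates a sine wave only at evenly spaced instants. The function name `sample_sine` and its parameters are made up for illustration; a real recorder reads values from hardware instead of computing them.

```python
import math

def sample_sine(freq_hz, sample_rate_hz, duration_s, amplitude=1.0):
    """Return discrete samples of a sine wave taken at evenly spaced instants."""
    n_samples = int(round(sample_rate_hz * duration_s))
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(n_samples)
    ]

# 10 ms of a 100 Hz sine, sampled 800 times per second
samples = sample_sine(freq_hz=100, sample_rate_hz=800, duration_s=0.01)
print(len(samples))  # 8
```

Everything between two neighbouring samples is simply not stored.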

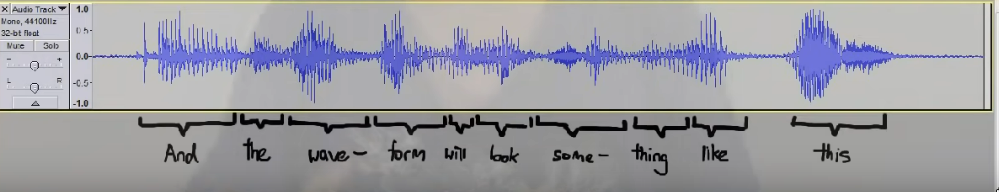

How do audio editors show a waveform from discrete audio?

- They use some kind of interpolation technique to reconstruct the continuous waveform from the discrete samples

- Eg. linear interpolation, cubic spline or polynomial interpolation.

- This is usually just used for editing and visualization, actual changes happen on the discrete samples.
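A rough sketch of the simplest option, linear interpolation, assuming `t_s` is non-negative and the samples are evenly spaced. `lerp_at` is a hypothetical helper, not something taken from a particular editor.

```python
def lerp_at(samples, sample_rate_hz, t_s):
    """Estimate the signal value at time t_s by linear interpolation."""
    pos = t_s * sample_rate_hz      # fractional sample index
    i = int(pos)                    # sample just before t_s
    if i >= len(samples) - 1:       # at or past the last sample
        return samples[-1]
    frac = pos - i                  # how far we are towards sample i + 1
    return samples[i] * (1 - frac) + samples[i + 1] * frac

samples = [0.0, 1.0, 0.0]           # three samples taken at 2 Hz
print(lerp_at(samples, 2, 0.25))    # 0.5, halfway between sample 0 and 1
```

The stored samples stay untouched; only the drawn curve is interpolated.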

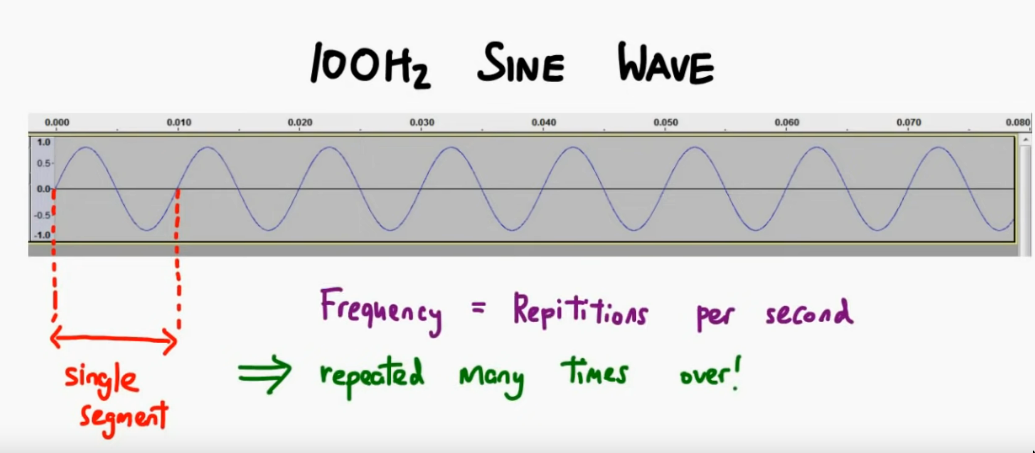

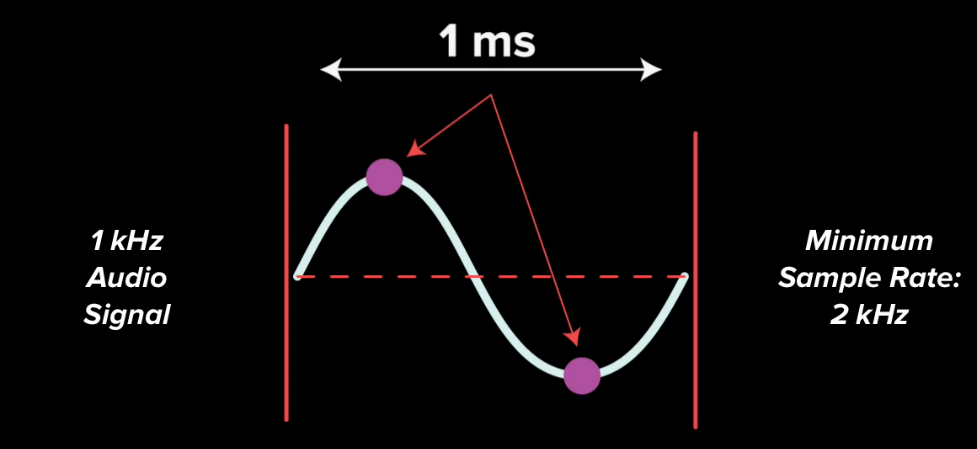

Frequency

- Eg. 100Hz sine Wave : The sine wave repeats itself 100 times in 1 second

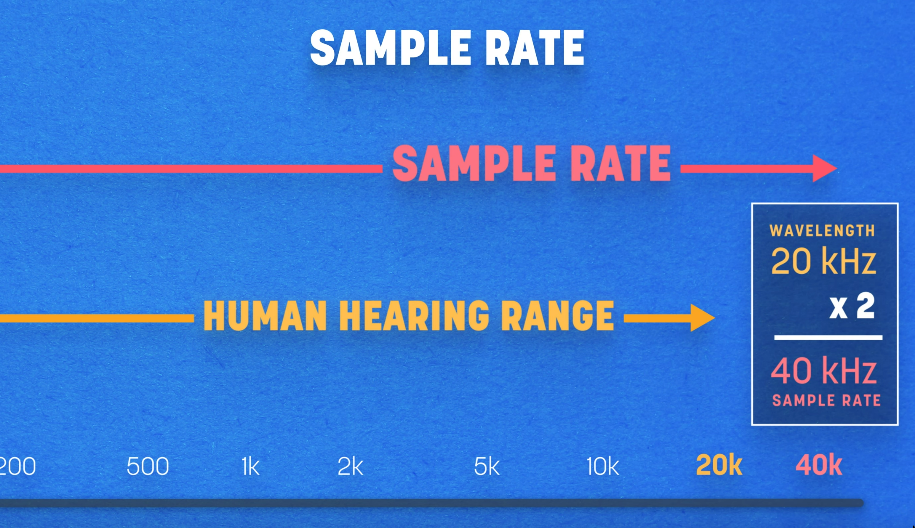

- Humans can hear frequencies from roughly 20 Hz to 20 kHz. So according to Nyquist, we need to sample at more than 40 kHz to be able to record freq. up to 20 kHz

Sampling rate

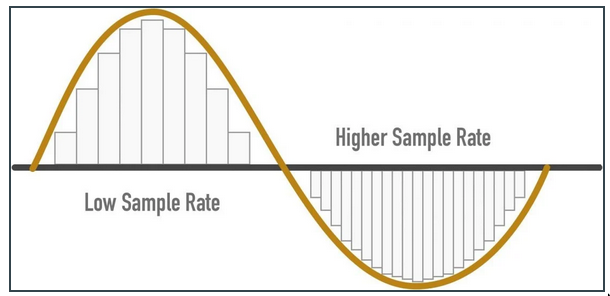

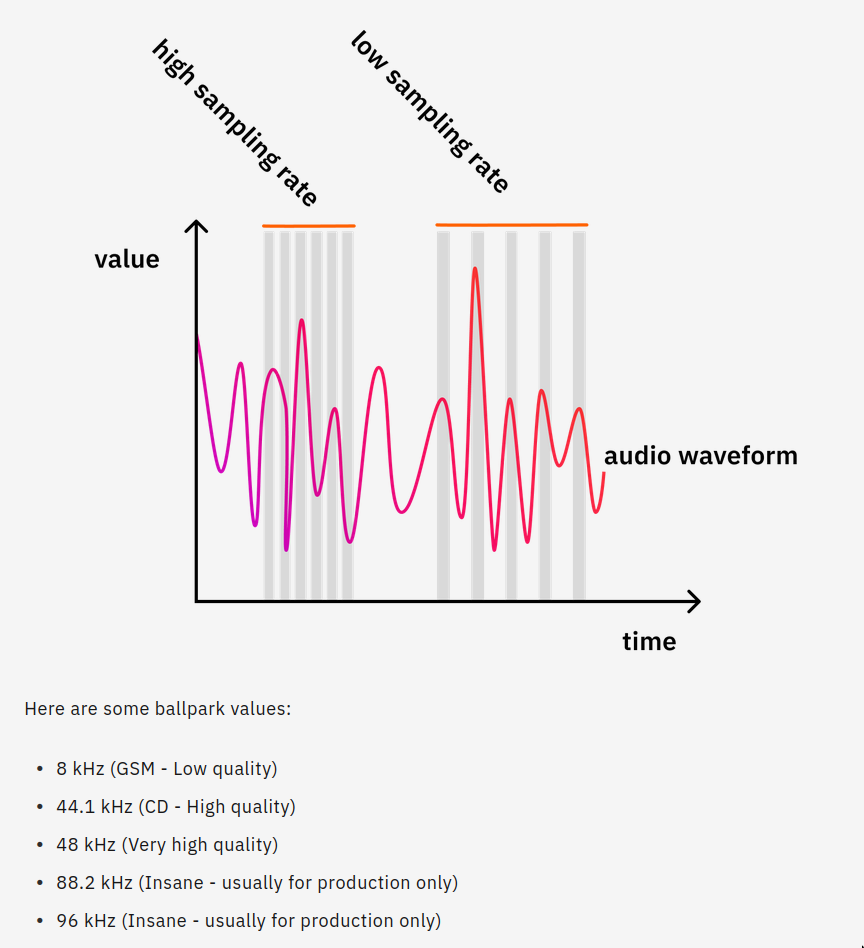

- High Sampling rate: More detailed, more granular, high storage

- Low Sampling rate: Less detailed, less granular, less storage

- Unit: Hz. Eg. 800 Hz, means analog signal was sampled 800 times per second

- 44.1kHz is common for audio, 48kHz is common for audio+video


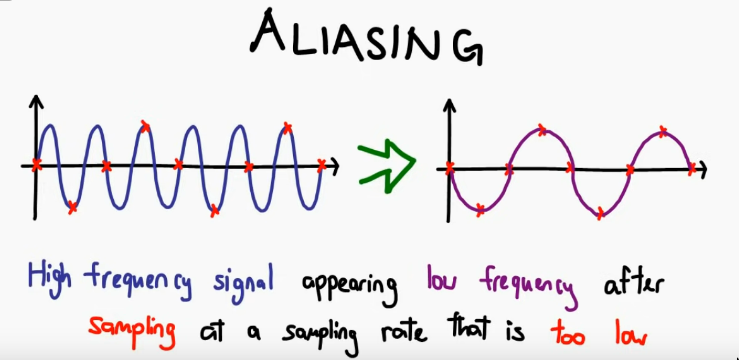

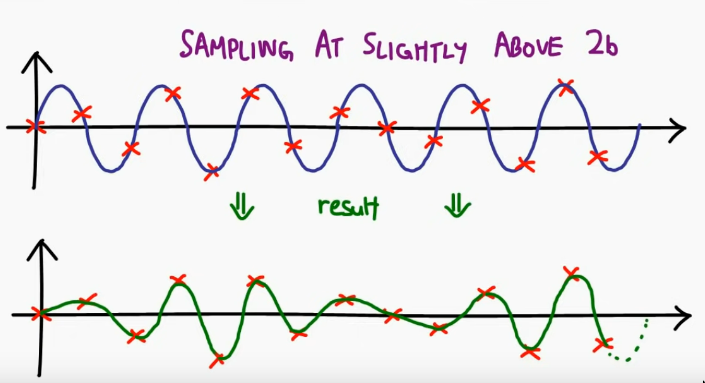

Aliasing

- If the sampling rate is too low, the reconstructed waveform looks nothing like the original waveform

- The solution is to sample at a high enough rate, as given by the Nyquist-Shannon Theorem

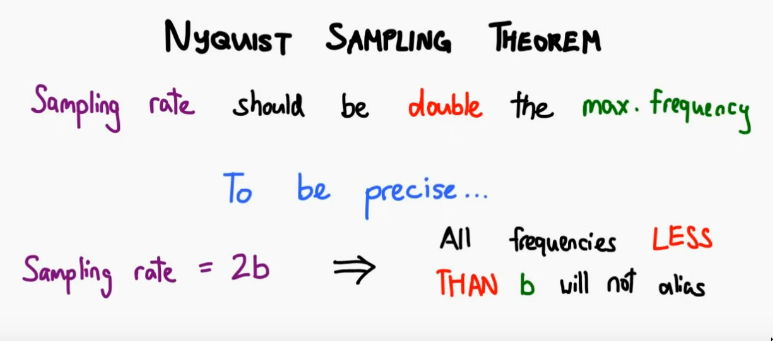
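To make aliasing concrete, here is a small sketch (the numbers and the helper `sine_samples` are chosen for illustration only). A 700 Hz sine sampled at 800 Hz produces exactly the same samples as an inverted 100 Hz sine, so after sampling the two cannot be told apart.

```python
import math

def sine_samples(freq_hz, sample_rate_hz, n_samples):
    """Sample a unit-amplitude sine wave at a fixed rate."""
    return [
        math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(n_samples)
    ]

sample_rate = 800
high = sine_samples(700, sample_rate, 16)  # above the 400 Hz limit
low = sine_samples(100, sample_rate, 16)   # safely below it

# Every sample of the 700 Hz tone equals the negated 100 Hz sample
aliased = all(math.isclose(h, -l, abs_tol=1e-9) for h, l in zip(high, low))
print(aliased)  # True
```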

Nyquist-Shannon Theorem

- To capture a frequency, the sampling rate must be more than double that frequency

- Exactly double is not enough, it has to be slightly more than double. So it’s not 40kHz but something like 44.1kHz

Nyquist Rate

- Minimum sampling rate required to capture a given freq. without aliasing (double that freq.)

Nyquist Frequency

- Max. freq that will not alias given a sample rate

- Nyquist freq. = Sampling rate / 2, i.e. the sampling rate is double the max freq.

- Eg. for CD w sampling rate of 44.1 kHz, highest freq that can be accurately represented is 22.05 kHz
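The two definitions are mirror images of each other, which a few lines of Python can show. The helper names are made up for this sketch, and `will_alias` treats a tone exactly at the Nyquist frequency as unsafe.

```python
def nyquist_frequency(sample_rate_hz):
    """Highest frequency a given sample rate can represent."""
    return sample_rate_hz / 2

def nyquist_rate(max_freq_hz):
    """Lower bound on the sample rate needed for a given frequency."""
    return 2 * max_freq_hz

def will_alias(freq_hz, sample_rate_hz):
    """True if freq_hz is too high for this sample rate."""
    return freq_hz >= nyquist_frequency(sample_rate_hz)

print(nyquist_frequency(44_100))   # 22050.0
print(nyquist_rate(20_000))        # 40000
print(will_alias(20_000, 44_100))  # False
```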

Bitrate and Bitdepth

Bitrate (throughput)

- Unit: kbps

- Higher bitrates: higher quality but also increased data transmission requirements

- Usually comes into play when transcoding/quantization etc.

- Represents the amount of data used to store or transmit one second of audio or video content

- Audio

  - Can be a by-product of sampling rate and bit depth

    - 24-bit audio w SR of 48 kHz: Higher bitrate

    - 16-bit audio w SR of 48 kHz: Lower bitrate

- Video

  - Bitrate is the amount of data used to represent one second of video footage.

- Can predict final size:

  - Eg. 1 min of audio at 1411 kbit/s

    - (1411 kbit/s / 8) kbyte/s * 60 s = 10582 kbyte ≈ 10.58 MB (about 10.09 MiB)

    - The bitrate itself depends on the codec being used, which might use compression and such

- Ballpark numbers

- 13 kbits/s (GSM quality)

- 320 kbit/s (High-quality MP3)

- 1411 kbit/s (16bit WAV, CD quality, PCM)
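The CD-quality figure and the size estimate above can be reproduced with a little arithmetic. This sketch assumes uncompressed PCM and ignores container overhead; the function names are illustrative.

```python
def pcm_bitrate_bps(sample_rate_hz, bit_depth, channels):
    """Bits per second of uncompressed PCM audio."""
    return sample_rate_hz * bit_depth * channels

def size_bytes(bitrate_bps, duration_s):
    """Payload size for a constant bitrate over a duration."""
    return bitrate_bps * duration_s / 8

cd = pcm_bitrate_bps(44_100, 16, 2)
print(cd)                        # 1411200 bit/s, i.e. about 1411 kbit/s
print(size_bytes(cd, 60) / 1e6)  # 10.584 MB for one minute
```

For a lossy codec the first function does not apply, but the second still gives a ballpark size once the target bitrate is known.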

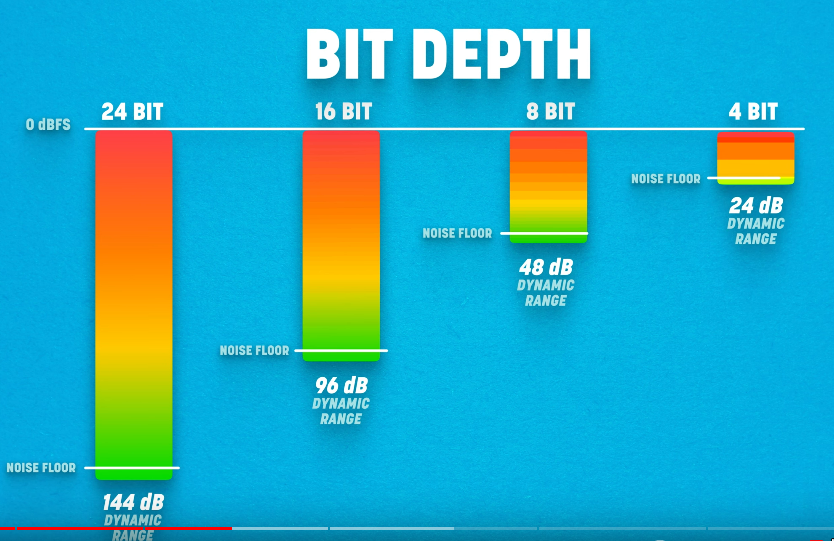

Bitdepth

- Amount of possible amplitude/level values

- More bitdepth = More accuracy
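A quick sketch of why more bits means more accuracy: each extra bit doubles the number of levels a sample can snap to. `quantize` is a simplified illustration that assumes input samples in the range -1.0 to 1.0 and signed integer output; real converters may also apply dithering.

```python
def levels(bit_depth):
    """Number of distinct amplitude values for a bit depth."""
    return 2 ** bit_depth

def quantize(sample, bit_depth):
    """Map a sample in [-1.0, 1.0] to the nearest signed integer level."""
    max_level = 2 ** (bit_depth - 1) - 1
    clipped = max(-1.0, min(1.0, sample))  # out-of-range input is clipped
    return round(clipped * max_level)

print(levels(16))         # 65536
print(levels(24))         # 16777216
print(quantize(1.0, 16))  # 32767
```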

Audio/Video Engineering

Channels

- Channels are just separate “recordings” or “streams” of audio signals.

- The nice thing about channels is that you can create crazy experiences with them: the same file can hold multiple, separate audio streams.

- 1 : Mono

- 2 : Stereo (left and right)

- 2.1 : 2 for stereo and one for the LFE (“low-frequency effects” a.k.a.: “bass”)

- 5.1 : 2 front, 1 center, 2 rear and 1 LFE
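One common way to keep several channels in a single stream is to interleave them sample by sample, which is how uncompressed PCM in a WAV file is usually laid out. The helpers below are a hypothetical sketch of that layout for two channels, not code from any library.

```python
def interleave(left, right):
    """Interleave two mono channels into one L, R, L, R, ... sequence."""
    if len(left) != len(right):
        raise ValueError("channels must have the same number of samples")
    frames = []
    for l, r in zip(left, right):
        frames.extend((l, r))
    return frames

def deinterleave(frames, channels):
    """Split an interleaved sequence back into one list per channel."""
    return [frames[c::channels] for c in range(channels)]

stereo = interleave([1, 2, 3], [10, 20, 30])
print(stereo)                   # [1, 10, 2, 20, 3, 30]
print(deinterleave(stereo, 2))  # [[1, 2, 3], [10, 20, 30]]
```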

Codecs

- `ffmpeg -codecs`

- The codec basically does the compression here.

Video Codecs

- Standard codecs such as H.264 and H.265 are usually good enough; they focus on small file sizes with good quality.

Audio codecs

- We usually don’t need all of the raw audio

- Our hearing covers only a certain freq. range, so we don’t need anything above 20kHz

- The presence of one frequency can affect your ability to detect a different frequency (masking). If the codec knows that, it can skip encoding the part you would not hear.

- MP3, AAC, OGG: These are common lossy audio formats. See Compression and Image Compression

- PCM (e.g. in a WAV container), FLAC: These are lossless formats.

Containers

- `ffmpeg -formats`

- These are the “formats” (See Image Compression)

- Eg. A MOV container can contain an H.264 video stream and an AAC audio stream together.

- Eg. MOV, MP4, MKV, WebM, WAV (audio only) etc.

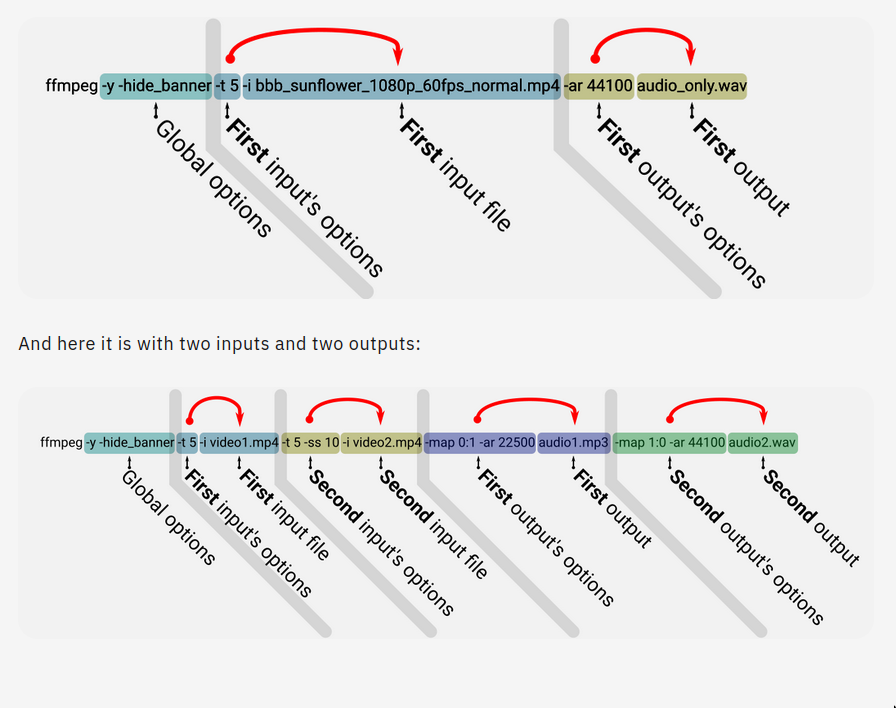

ffmpeg usage

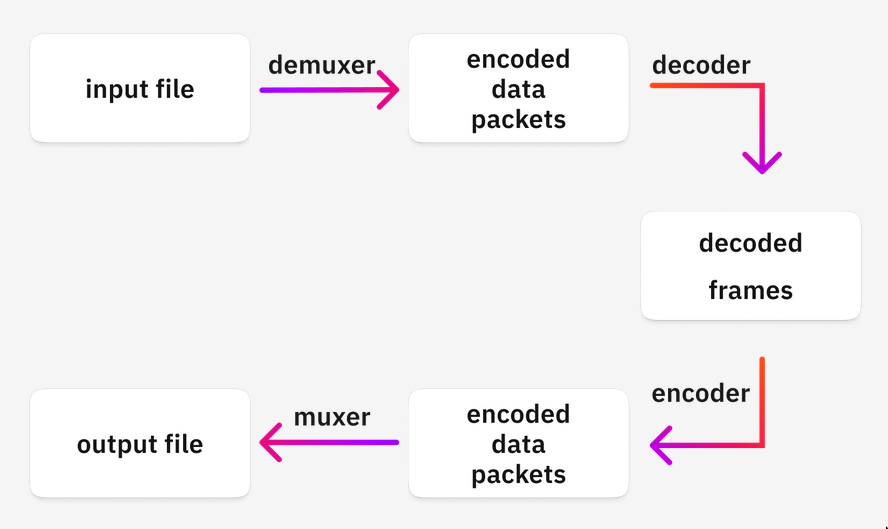

- In most cases, ffmpeg does all the processing on the decoded frames

Decoded stuff

- The most basic pixel format for video frames is called `rgb24`.

- Stores the R, G and B values right after each other in 3x8 (24) bits per pixel, which can hold about 16.7 million (2^24) colors.

      low memory address    ---->      high memory address
      |pixel|pixel|pixel|pixel|pixel|pixel|pixel|pixel|...
      |-----|-----|-----|-----|-----|-----|-----|-----|...
      |R|G|B|R|G|B|R|G|B|R|G|B|R|G|B|R|G|B|R|G|B|R|G|B|...
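A small sketch of that packed layout, assuming R, G, B byte order (as the name `rgb24` suggests) and no padding at the end of each row. Real decoded frames may carry a row stride, which this illustration ignores. The helper names are made up.

```python
def pack_rgb24(pixels):
    """Pack (r, g, b) tuples into flat bytes, 3 bytes per pixel."""
    out = bytearray()
    for r, g, b in pixels:
        out += bytes((r, g, b))
    return bytes(out)

def pixel_at(frame, width, x, y):
    """Read back the (r, g, b) tuple at column x, row y."""
    offset = (y * width + x) * 3
    return tuple(frame[offset:offset + 3])

# A 2x2 frame: red, green on the first row; blue, dark grey on the second
frame = pack_rgb24([(255, 0, 0), (0, 255, 0), (0, 0, 255), (1, 2, 3)])
print(len(frame))                 # 12 bytes for 4 pixels
print(pixel_at(frame, 2, 1, 1))   # (1, 2, 3)
print(2 ** 24)                    # 16777216 possible colors
```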

Inputs

- `streams` are the building blocks of `containers`

- Hierarchy: File → Container → Stream → Channels

- Containers (Eg. mov, mpeg)

  - Streams

    - Audio tracks

      - Different languages

      - Different channels (stereo, mono etc)

    - Subtitle tracks

      - EN

      - RUS

    - Video tracks

    - Others

Output

Mapping

- Mapping refers to the act of connecting input file streams with output file streams

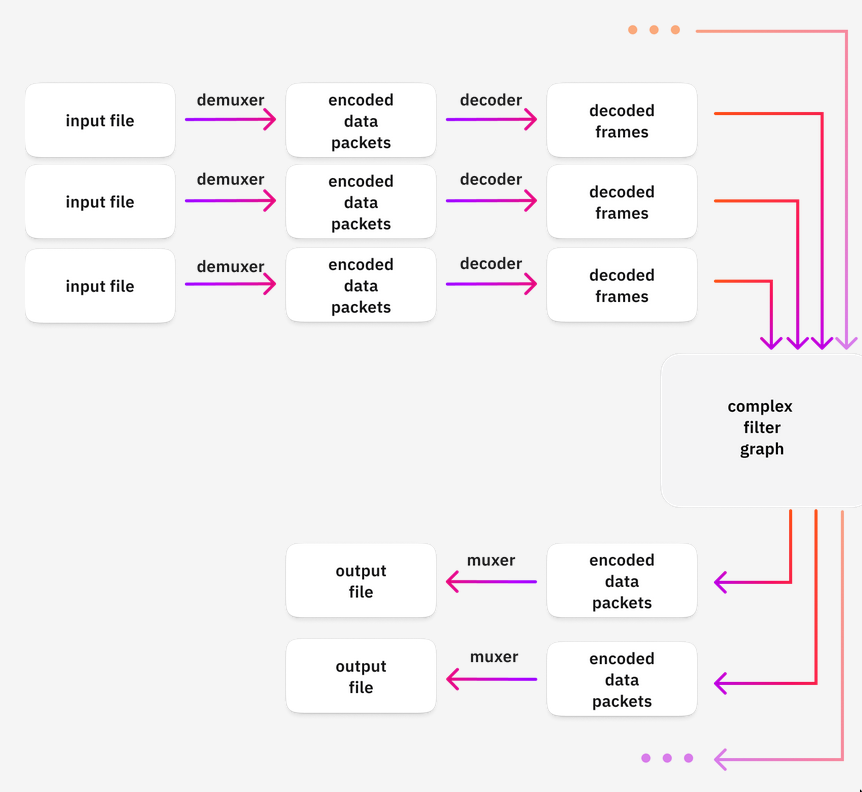

- Eg. with 3 input files and 4 output files, you must also define what should go where.

- It’s a parameter of output files

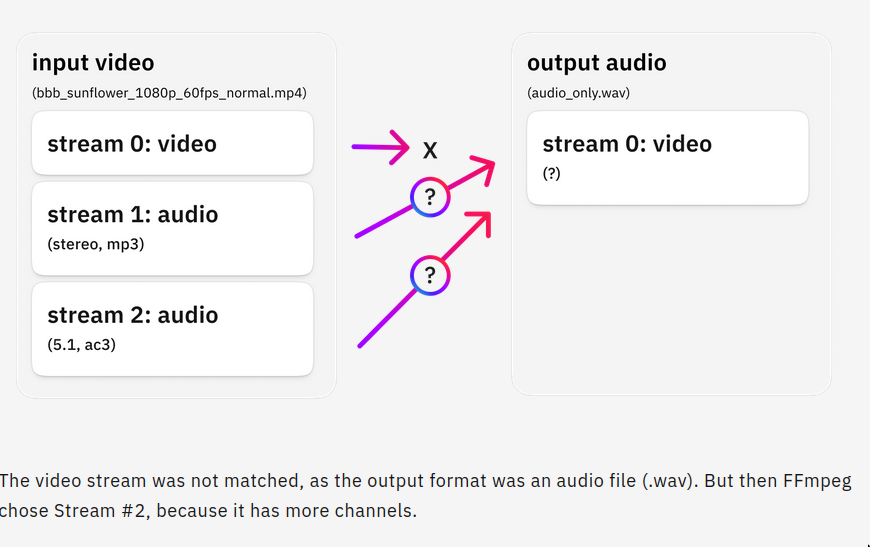
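Since mapping is a parameter of each output file, the `-map` options go right before the output they belong to. The sketch below only builds the argument list and does not run ffmpeg. The file names and the helper `build_ffmpeg_args` are hypothetical; a specifier such as `0:v:0` means "first video stream of input 0".

```python
def build_ffmpeg_args(inputs, outputs):
    """Build an ffmpeg argument list.

    inputs:  list of input file paths
    outputs: list of (output_path, [map_specifier, ...]) pairs
    """
    args = ["ffmpeg"]
    for path in inputs:
        args += ["-i", path]
    for out_path, maps in outputs:
        for spec in maps:
            args += ["-map", spec]  # applies to the next output file
        args.append(out_path)
    return args

# Video from the first input, audio from the second, into one file
args = build_ffmpeg_args(
    ["video.mp4", "audio.wav"],
    [("out.mkv", ["0:v:0", "1:a:0"])],
)
print(" ".join(args))
```

The resulting list could be handed to `subprocess.run` on a machine where ffmpeg is installed.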

Automatic stream selection & Mapping

Filtering

- It sounds fancy, but it’s just ffmpeg’s term for modifying decoded frames (audio or video)

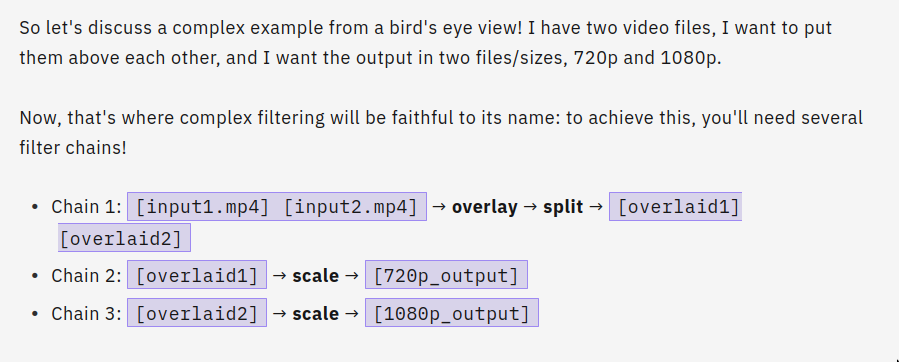

Chaining

- Rule: You can only consume the output of a chain once

- That’s why we used split instead of the same input for chains 2 and 3
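The rule can be illustrated by building a filtergraph string of the kind passed to `-filter_complex`. This is a sketch, not the graph from the image: the labels and the chosen filters (`split`, `hflip`, `vflip`, `hstack`) are just an example, and the once-only check is a simplification of what ffmpeg itself enforces.

```python
def chain(inputs, filters, outputs):
    """Format one chain, e.g. [a][b]filter1,filter2[out]."""
    ins = "".join(f"[{label}]" for label in inputs)
    outs = "".join(f"[{label}]" for label in outputs)
    return f"{ins}{','.join(filters)}{outs}"

def filtergraph(chains):
    """Join chains with ';' and reject labels consumed more than once."""
    consumed = set()
    for inputs, _filters, _outputs in chains:
        for label in inputs:
            if label in consumed:
                raise ValueError(f"[{label}] is consumed more than once")
            consumed.add(label)
    return ";".join(chain(*c) for c in chains)

graph = filtergraph([
    (["0:v"], ["split=2"], ["a", "b"]),        # duplicate the input
    (["a"], ["hflip"], ["left"]),              # chain 2 uses copy a
    (["b"], ["vflip"], ["right"]),             # chain 3 uses copy b
    (["left", "right"], ["hstack"], ["out"]),  # put them side by side
])
print(graph)
```

Feeding `a` into both the second and third chain would raise `ValueError` here, which is the situation `split` avoids.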

CLI