tags: MCP, Open Source LLMs (Transformers), Deploying ML applications (applied ML)

What? When? How?

- LLMs using tools, planning tasks, and executing multi-step processes

- Why Agent Engineering (swyx, YouTube)

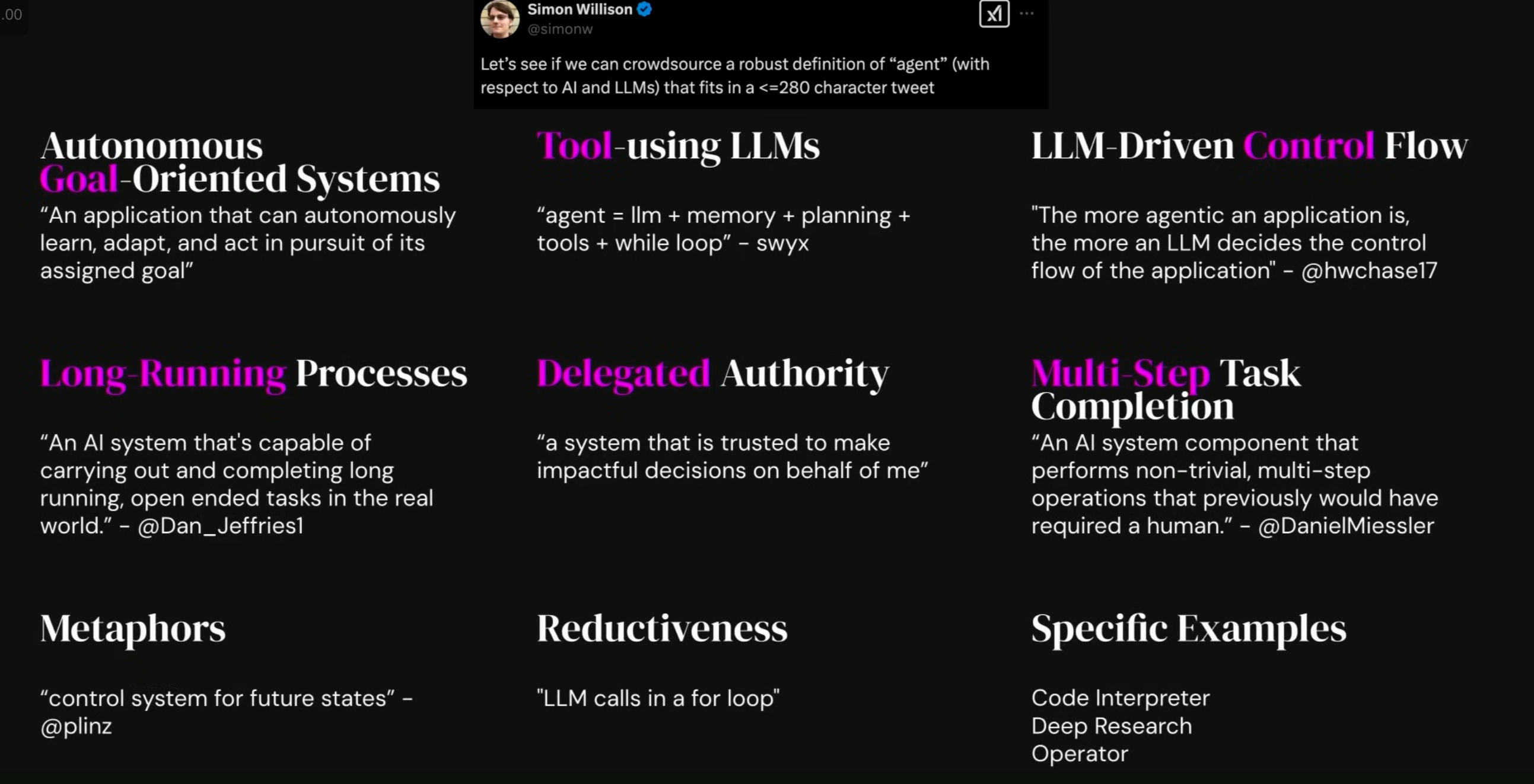

Definitions

- An LLM in a loop

- Agents are fundamentally just programs with a loop structure. Their key characteristic is that different modules make the decisions about which tools to call.

- Agents begin their work with either a command from, or interactive discussion with, the human user. Once the task is clear, agents plan and operate independently, potentially returning to the human for further information or judgement. During execution, it’s crucial for the agents to gain “ground truth” from the environment at each step (such as tool call results or code execution) to assess their progress. Agents can then pause for human feedback at checkpoints or when encountering blockers. The task often terminates upon completion, but it’s also common to include stopping conditions (such as a maximum number of iterations) to maintain control.
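The loop described above (act, observe ground truth, pause for a human at blockers, stop on completion or an iteration cap) can be sketched in plain Python. This is a minimal illustration, not any framework's API: `Action`, `run_agent`, and the scripted `decide` policy are hypothetical names, and the policy stands in for a real LLM call so the sketch runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    """One decision from the model (hypothetical format)."""
    kind: str       # "tool", "ask_human", or "finish"
    tool: str = ""  # tool name when kind == "tool"
    arg: str = ""   # tool argument when kind == "tool"
    text: str = ""  # question for the human, or the final answer


def run_agent(
    task: str,
    decide: Callable[[list[str]], Action],  # stand-in for the LLM call
    tools: dict[str, Callable[[str], str]],
    ask_human: Callable[[str], str],
    max_iterations: int = 10,
) -> tuple[str, list[str]]:
    """Loop until the model finishes or the iteration cap is hit."""
    history = [f"task: {task}"]
    for _ in range(max_iterations):
        action = decide(history)
        if action.kind == "finish":
            return action.text, history
        if action.kind == "ask_human":
            # Checkpoint or blocker: pause and collect human feedback.
            history.append(f"human: {ask_human(action.text)}")
            continue
        # Tool call: its result is the "ground truth" fed back to the model.
        result = tools[action.tool](action.arg)
        history.append(f"{action.tool} -> {result}")
    # Stopping condition that keeps the loop under control.
    return "stopped: max iterations reached", history


# --- usage with a scripted policy instead of a real model ---
def add_numbers(expr: str) -> str:
    return str(sum(int(x) for x in expr.split("+")))


def scripted_decide(history: list[str]) -> Action:
    if len(history) == 1:
        return Action("tool", tool="calc", arg="2+3")
    if len(history) == 2:
        return Action("ask_human", text="Result is 5. Report it?")
    return Action("finish", text=history[1].split("-> ")[1])


answer, trace = run_agent(
    "add 2 and 3", scripted_decide, {"calc": add_numbers}, ask_human=lambda q: "yes"
)
print(answer)  # 5
print(trace)   # ['task: add 2 and 3', 'calc -> 5', 'human: yes']
```

Keeping the model behind a plain `decide` callable means the same loop can be exercised with a script in tests and wired to a real API later.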

Patterns

- Prompt chaining, e.g. generating a document and then translating it into another language as a second LLM call

- Routing, where an initial LLM call decides which model or call should be used next (sending easy tasks to Haiku and harder tasks to Sonnet, for example)

- Parallelization, where a task is broken up and run in parallel (e.g. image-to-text on multiple document pages at once) or processed by some kind of voting mechanism

- Orchestrator-workers, where an orchestrator triggers multiple LLM calls that are then synthesized together, for example running searches against multiple sources and combining the results

- Evaluator-optimizer, where one model checks the work of another in a loop
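The first two patterns above (chaining a generate call into a translate call, and routing easy vs. hard tasks to different models) can be sketched with nothing more than function calls. This assumes a hypothetical `call_llm(model, prompt)` client; `"haiku"` and `"sonnet"` are placeholder labels rather than real model IDs, and `fake_llm` replaces the network so the sketch runs offline.

```python
from typing import Callable

# Hypothetical client signature: call_llm(model, prompt) -> completion text.
# "haiku" and "sonnet" are placeholder labels, not real model IDs.
LLM = Callable[[str, str], str]


def generate_then_translate(call_llm: LLM, topic: str, language: str) -> str:
    """Prompt chaining: the second call consumes the first call's output."""
    draft = call_llm("sonnet", f"Write a short document about {topic}.")
    return call_llm("haiku", f"Translate into {language}:\n{draft}")


def route(call_llm: LLM, task: str) -> str:
    """Routing: a cheap classification call decides which model handles the task."""
    label = call_llm("haiku", f"Reply 'easy' or 'hard' for this task:\n{task}")
    model = "sonnet" if label.strip().lower() == "hard" else "haiku"
    return call_llm(model, task)


# --- usage with a fake client so the sketch runs offline ---
def fake_llm(model: str, prompt: str) -> str:
    if prompt.startswith("Reply 'easy' or 'hard'"):
        return "hard" if "proof" in prompt else "easy"
    if prompt.startswith("Translate"):
        return "[translated] " + prompt.split("\n", 1)[1]
    return f"<{model} output>"


print(generate_then_translate(fake_llm, "AI agents", "French"))  # [translated] <sonnet output>
print(route(fake_llm, "Write a proof that sqrt(2) is irrational"))  # <sonnet output>
print(route(fake_llm, "Say hello"))  # <haiku output>
```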

When?

- When building applications with LLMs, we recommend finding the simplest solution possible, and only increasing complexity when needed. This might mean not building agentic systems at all.

- Agents can be used for open-ended problems where it’s difficult or impossible to predict the required number of steps, and where you can’t hardcode a fixed path.

- The LLM will potentially operate for many turns, and you must have some level of trust in its decision-making. Agents’ autonomy makes them ideal for scaling tasks in trusted environments.

- Do NOT invest in complex agent frameworks before you’ve exhausted your options using direct API access and simple code.

How?

- anthropic-cookbook/patterns/agents

- How To Build An Agent | Amp 🌟

- Stevens: a hackable AI assistant using a single SQLite table and a handful of cron jobs

Patterns

Some reference patterns; they can be combined.

- Sequential Processing (Chains)

- Steps run in a fixed order, each using the previous step’s output.

- Example: Generate marketing copy → Evaluate it (structured output) → Suggest improvements (conditional on the evaluation).

- Routing

- Branch based on an earlier output, picking the model or tweaking its parameters dynamically.

- Example: Classify customer response as simple/complex → Use different models or adjust temperature based on complexity/creativity.

- Parallel Processing

- Run multiple steps simultaneously.

- Example: Summarize, extract keywords, and classify tone all at once.

- Orchestrator-Worker

- A central controller coordinates subtasks to achieve a larger goal.

- Example: Main task planner delegates to specialized sub-models for research, writing, and editing.

- Evaluator-Optimizer

- Loop through generate-evaluate cycles until quality criteria are met (up to a maximum number of iterations).

- Example: Refine a draft until a separate evaluation model scores it as acceptable.

- Multi-Step Tool (when the number of steps is unknown)

- Iteratively use tools to solve a problem, stopping when:

- No further tool is called, or

- A max step limit is reached.

- Example: Loop through available tools until an answer is found or max steps are hit.

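The Sequential Processing example above (generate copy, evaluate it with structured output, then suggest improvements only if the evaluation fails) can be sketched as three chained calls with a gate. The JSON shape `{"clear": ..., "score": ...}`, the score threshold of 7, and the prompt-in/text-out `call_llm` are all assumptions for illustration; `fake_llm` stands in for a real model.

```python
import json
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, completion text out


def marketing_chain(call_llm: LLM, product: str) -> dict:
    # Step 1: generate.
    copy = call_llm(f"Write marketing copy for {product}.")
    # Step 2: evaluate, asking for structured (JSON) output.
    raw = call_llm(
        "Rate this copy. Reply with JSON like "
        '{"clear": true, "score": 7}.\n' + copy
    )
    evaluation = json.loads(raw)
    result = {"copy": copy, "evaluation": evaluation, "suggestions": None}
    # Step 3: runs only when the evaluation says the copy needs work.
    if not evaluation["clear"] or evaluation["score"] < 7:
        result["suggestions"] = call_llm(f"Suggest improvements for:\n{copy}")
    return result


# --- usage with a fake model that always rates the copy poorly ---
def fake_llm(prompt: str) -> str:
    if prompt.startswith("Write"):
        return "Buy our synergy platform today!"
    if prompt.startswith("Rate"):
        return '{"clear": false, "score": 4}'
    return "Say what the product actually does."


result = marketing_chain(fake_llm, "a synergy platform")
print(result["suggestions"])  # Say what the product actually does.
```

Parsing the evaluation into a dict is what lets ordinary code, rather than another prompt, decide whether step 3 runs.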
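For the Routing example above, classifying a customer message and then choosing both the model and the temperature can be sketched as a lookup table keyed on the classifier's label. The label format `"<simple|complex>,<factual|creative>"`, the model names, and the temperature values are hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ModelConfig:
    model: str          # placeholder label, not a real model ID
    temperature: float


# Hypothetical routing table: (complexity, creative?) -> model settings.
ROUTES = {
    ("simple", False): ModelConfig("small-model", 0.0),
    ("simple", True): ModelConfig("small-model", 0.8),
    ("complex", False): ModelConfig("large-model", 0.2),
    ("complex", True): ModelConfig("large-model", 0.9),
}


def pick_config(classify: Callable[[str], str], message: str) -> ModelConfig:
    """Route on a classifier label of the form '<simple|complex>,<factual|creative>'."""
    complexity, _, style = classify(message).strip().lower().partition(",")
    if complexity not in ("simple", "complex"):
        complexity = "complex"  # unrecognized label: fall back to the stronger model
    return ROUTES[(complexity, style.strip() == "creative")]


# --- usage with a fake classifier in place of the first LLM call ---
def fake_classifier(message: str) -> str:
    return "simple,factual" if "refund" in message else "complex,creative"


print(pick_config(fake_classifier, "Where is my refund?"))
# ModelConfig(model='small-model', temperature=0.0)
```

Falling back to the stronger model on an unexpected label is one possible design choice; a stricter router might raise instead.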
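The Parallel Processing example above (summarize, extract keywords, and classify tone at once) maps naturally onto `asyncio.gather`, which awaits independent coroutines concurrently and returns results in input order. The async `call_llm` signature is an assumption; `fake_llm` simulates 0.2 s of latency per call so the speed-up is visible.

```python
import asyncio
import time
from typing import Awaitable, Callable

# Hypothetical async client signature: prompt in, completion text out.
AsyncLLM = Callable[[str], Awaitable[str]]


async def analyze(call_llm: AsyncLLM, text: str) -> dict[str, str]:
    """Run independent prompts over the same text concurrently."""
    prompts = {
        "summary": f"Summarize:\n{text}",
        "keywords": f"List keywords:\n{text}",
        "tone": f"Classify the tone as positive, neutral or negative:\n{text}",
    }
    # gather() awaits all calls at once and returns results in input order.
    results = await asyncio.gather(*(call_llm(p) for p in prompts.values()))
    return dict(zip(prompts, results))


# --- usage with a fake model that simulates 0.2 s of latency per call ---
async def fake_llm(prompt: str) -> str:
    await asyncio.sleep(0.2)
    return prompt.split(":", 1)[0]  # echo the instruction part of the prompt


start = time.perf_counter()
report = asyncio.run(analyze(fake_llm, "Great product, fast shipping."))
elapsed = time.perf_counter() - start
print(report)
print(f"{elapsed:.2f}s")  # roughly 0.2 s rather than 0.6 s, since the calls overlap
```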
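The Orchestrator-Worker example above (a planner delegating research, writing, and editing to specialized sub-models) can be sketched as plan, delegate, synthesize. The JSON plan format and the role names are hypothetical; workers are called one after another here for readability, though independent subtasks could run in parallel, and a real writing or editing worker would likely also receive earlier outputs.

```python
import json
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, completion text out


def orchestrate(orchestrator: LLM, workers: dict[str, LLM], goal: str) -> str:
    # 1. Plan: the orchestrator returns a JSON list of {"role", "task"} subtasks.
    plan = json.loads(orchestrator(
        "Break this goal into subtasks. Reply with a JSON list of objects with "
        '"role" and "task" keys. Roles: ' + ", ".join(workers) + f"\nGoal: {goal}"
    ))
    # 2. Delegate each subtask to the specialized worker for its role.
    outputs = [f"[{step['role']}] {workers[step['role']](step['task'])}" for step in plan]
    # 3. Synthesize: the orchestrator combines the worker results.
    return orchestrator("Combine these results:\n" + "\n".join(outputs))


# --- usage with fake models so the sketch runs offline ---
def fake_orchestrator(prompt: str) -> str:
    if prompt.startswith("Break"):
        return json.dumps([
            {"role": "research", "task": "collect facts on agents"},
            {"role": "writing", "task": "draft an intro"},
            {"role": "editing", "task": "tighten the intro"},
        ])
    return prompt.split("\n", 1)[1]  # naive "synthesis": pass results through


workers = {
    "research": lambda t: f"notes ({t})",
    "writing": lambda t: f"draft ({t})",
    "editing": lambda t: f"edits ({t})",
}
summary = orchestrate(fake_orchestrator, workers, "Write a post about agents")
print(summary)
```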
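The Evaluator-Optimizer loop above (refine a draft until a separate evaluator accepts it, up to a maximum number of iterations) can be sketched with two callables. The `(task, previous draft, feedback)` generator signature and the word-count acceptance rule in the fakes are assumptions for illustration; in practice both would be LLM calls.

```python
from typing import Callable


def refine(
    generate: Callable[[str, str, str], str],     # (task, previous draft, feedback) -> draft
    evaluate: Callable[[str], tuple[bool, str]],  # draft -> (acceptable, feedback)
    task: str,
    max_iterations: int = 5,
) -> tuple[str, int]:
    """Alternate generator and evaluator until the draft passes or the cap is hit."""
    draft, feedback = "", ""
    for i in range(1, max_iterations + 1):
        draft = generate(task, draft, feedback)
        ok, feedback = evaluate(draft)
        if ok:
            return draft, i
    return draft, max_iterations  # last draft, even though it never passed


# --- usage with fake generator/evaluator in place of two models ---
def fake_generate(task: str, previous: str, feedback: str) -> str:
    if not previous:
        return task
    return previous + " with more detail" if feedback == "add detail" else previous


def fake_evaluate(draft: str) -> tuple[bool, str]:
    return (True, "") if len(draft.split()) >= 8 else (False, "add detail")


draft, rounds = refine(fake_generate, fake_evaluate, "Explain agents")
print(rounds, draft)  # 3 Explain agents with more detail with more detail
```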
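The Multi-Step Tool pattern above, with its two stopping conditions (no further tool is called, or a max step limit is reached), can be sketched as a loop over a tool registry. The reply shape (`{"tool": ..., "args": ...}` vs. `{"text": ...}`), the message format, and both tools are hypothetical; `fake_model` scripts the decisions a real model would make, and the temperature value is stub data.

```python
from typing import Any, Callable, Optional

# Hypothetical model reply: {"tool": name, "args": {...}} or {"text": final answer}.
Model = Callable[[list[dict[str, Any]]], dict[str, Any]]


def run_tools(model: Model, tools: dict[str, Callable[..., Any]],
              question: str, max_steps: int = 5) -> Optional[str]:
    messages: list[dict[str, Any]] = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = model(messages)
        if "tool" not in reply:
            return reply["text"]  # no further tool call: done
        result = tools[reply["tool"]](**reply["args"])
        messages.append({"role": "tool", "name": reply["tool"], "content": result})
    return None  # max step limit reached without a final answer


# --- usage with stub tools and a scripted model ---
def get_temperature_c(city: str) -> float:
    return {"Paris": 21.0}.get(city, 15.0)  # stub data, not a real weather lookup


def c_to_f(celsius: float) -> float:
    return celsius * 9 / 5 + 32


def fake_model(messages: list[dict[str, Any]]) -> dict[str, Any]:
    tool_msgs = [m for m in messages if m["role"] == "tool"]
    if not tool_msgs:
        return {"tool": "get_temperature_c", "args": {"city": "Paris"}}
    if len(tool_msgs) == 1:
        return {"tool": "c_to_f", "args": {"celsius": tool_msgs[0]["content"]}}
    return {"text": f"It is {tool_msgs[1]['content']:.1f}°F in Paris."}


tools = {"get_temperature_c": get_temperature_c, "c_to_f": c_to_f}
answer = run_tools(fake_model, tools, "How warm is Paris in Fahrenheit?")
print(answer)  # It is 69.8°F in Paris.
```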

Agent Frameworks

Everyone is coming up with their own agent framework.

| Link | Description |
|---|---|
| AWS Multi-Agent Orchestrator | A flexible framework for managing multiple AI agents, handling complex conversations, intelligently routing queries, and maintaining context. |
| ai16z/eliza | An open-source framework for creating, deploying, and managing versatile AI agents (elizas) capable of interacting across various platforms. |
| Microsoft AutoGen | An open-source framework for building AI agent systems, simplifying the creation and orchestration of event-driven, multi-agent applications. |
| LangChain LangGraph | A stateful, low-level orchestration library built on LangChain for creating controllable agent workflows, especially cyclical graphs for agent runtimes. |
| HuggingFace smolagents | A simple, lightweight library (~1k lines of code) for building AI agents that write their actions in code, supporting various LLMs and Hub integration. |
| Langroid | An intuitive, lightweight Python framework using a multi-agent programming paradigm where agents collaborate by exchanging messages. |
| PydanticAI | A Python agent framework from the Pydantic team. |
| atomic-agents | A framework designed around the concept of atomicity, built on top of Instructor and Pydantic. |