tags : AWS, Filesystems, Distributed Filesystems, Storage, Storage Engines

FAQ

S3 presigned URL

It’s a AWS s3 concept but s3 based api store provders like R2 provide support for it(limited)

- Can be used for both uploading and downloading.

- Does not work for multiple files because s3 itself doesnt work w multiple files at a time

- pre-signed URLs also allow you to set conditions such as file size limit, type of file, file name etc to ensure malicious party isn’t uploading a massive 1 TB file into your bucket which you serve as profile pics. However, while amazon S3 supports these “conditions”, others like backblaze implementing S3 may or may not implement it. So beware.

- Note that limiting content length only works with

createPresignedPost, not withgetSignedUrl. - python 3.x - AWS S3 generate_presigned_url vs generate_presigned_post for uploading files - Stack Overflow

- https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/s3/client/generate_presigned_post.html#S3.Client.generate_presigned_post

- https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/s3/client/generate_presigned_url.html#S3.Client.generate_presigned_url

- PUTS vs POST: https://advancedweb.hu/differences-between-put-and-post-s3-signed-urls/

- Note that limiting content length only works with

- Some practices

- Files uploaded to S3 are quarantined with tags until we validate the binary contents appears to match the signature for a given extension/mime-type with a Lambda.

- If we want to resize we can use ImageMagik(see Image Compression) or use https://github.com/libvips/libvips. Which can be done separately but it’s an OK practice for user uploads to go directly to s3 instead of our backend.

Generation of e2 presigned URLs

Generation of the signed URL is static, NO CONNECTION WITH R2 is made, whatever is generating the presigned URL just needs to have the correct access creds so that the signed URL can be signed correctly. (Verify)

“Presigned URLs are generated with no communication with R2 and must be generated by an application with access to your R2 bucket’s credentials.” all that’s required is the secret + an implementation of the signing algorithm, so you can generate them anywhere.

- Presigned URLs are sort of security tradeoff.

- There are also examples on the interwebs which suggest sending out direct AWS credentials as a security

- They’re specific to one resource, if someone tries to alter the identifiers of the generated URL they’ll usually get a signature mismatch error from S3.

Gotchas

- Another thing that bit me in the past is that if I created a pre-signed URL using temp creds, then the URL expires when the creds expire.

- The most important thing about presigned URLs is that they authenticate you, but they do not authorize you.

Where are presigned URLs generated? (No network requests!)

- Generating Presigned URLs don’t need us to make a make a network request to the object store.

- But you do need the credentials!

- So, formula: credentials + signing algo + filepath = presigned url. (i.e there’s no network request involved)

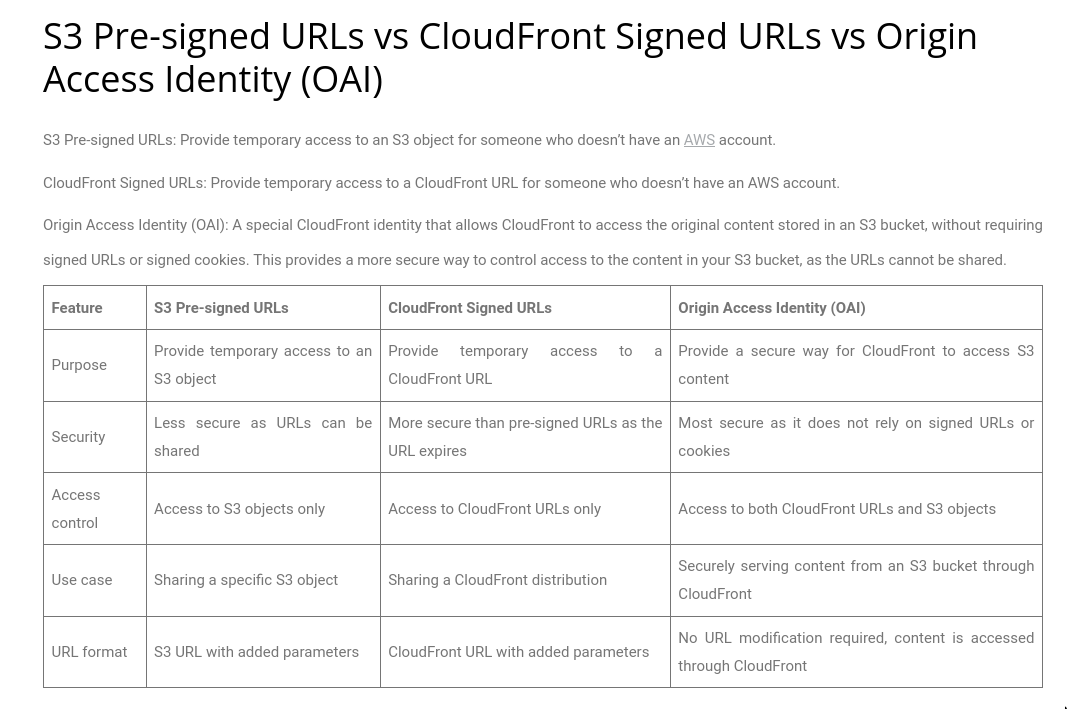

S3 signed URL vs Cloudfront signed URL

S3hasPresigned URLsCloudfronthasSigned URLs / Signed Cookies- AWS back at its bs

- It has signed urls for both foundfront and s3 and they are not the same and usecase of one over another is little blurry on the face of it

- c# - What is difference between Pre-Signed Url and Signed Url? - Stack Overflow

- https://liveroomlk.medium.com/cloudfront-signed-urls-cookies-and-s3-presigned-urls-be850c34f9ce

- Using CloudFront as a Lightweight Proxy - Speedrun

State as of 2024

Consistency in object stores

Concurrent Writes

Eg. Backups

- You have backups on S3, backups are done from multiple locations, lot of duplicate files floating around in the bucket

- You need to design a system which will

- Deduplicate these backups to save some sweet space/disk/gandhi.

- Expire data which was modified X days ago

- Concurrency issue with these 2 cases:

De-duplication: If we run 2 instances of de-duplication program and it finds the other duplicate and decide to delete the former, we’ll end up with all variants of the duplicate deleted!Old data expiry: If there is a race condition where we are appending new data to some object in s3 and at the same time we are deleting that file because it was expired when we first checked but it’s getting updated now! So in this case, the new data will go for that file will go missing as the file itself will be deleted.

- Solution: External Locks(eg. dynamodb), CAS. (CAS is what is being supported by other object stores but not s3)

CAS

- In object store speak, CAS is sometimes mentioned as “precondition”

- It guarantees that:

no other thread wrote to the memory location in between the "compare" and the "swap" - See Lockfree and Compare-And-Swap Loops (CAS): CAS allows you to implement a

Lock-freesystem- Guaranteed system-wide progress (FORWARD PROGRESS GURANTEE)

- Some operation can be blocked on specific parts but rest of the system continiues to work without stall

- CAS is about supporting

atomic renames

- CAS is supported by other object stores via HTTP headers

- GCS:

x-goog-if-generation-matchheader - R2:

cf-copy-destination-if-none-match: *

- GCS:

**

S3

- Hacking misconfigured AWS S3 buckets: A complete guide | Hacker News

- S3 is showing its age | Hacker News

- Things you wish you didn’t need to know about S3 | Hacker News

Since they do promise “read-after-write consistency for PUTS of new objects in your S3 bucket [but only] eventual consistency for overwrite PUTS and DELETES”,

Consistency Model of S3 (Strong consistency)

-

Initially S3 was Eventual Consistent but later around 2020/2021 strong consistency was added.

-

It’s similar to Causal consistency - Wikiwand

“So this means that the “system” that contains the witness(es) is a single point of truth and failure (otherwise we would lose consistency again), but because it does not have to store a lot of information, it can be kept in-memory and can be exchanged quickly in case of failure.

Or in other words: minimize the amount of information that is strictly necessary to keep a system consistent and then make that part its own in-memory and quickly failover-able system which is then the bar for the HA component.”

-

-

From eaton

When people say “strong consistency” they tend to mean linearizable. When AWS S3 says “strong consistency”, they are actually giving you only causal consistency.

-

Strong Consistency

- What is Amazon S3? - Amazon Simple Storage Service

- All S3 GET, PUT, and LIST operations, as well as operations that change object tags, ACLs, or metadata, are now strongly consistent. What you write is what you will read, and the results of a LIST will be an accurate reflection of what’s in the bucket.

Last writer wins, no locking (Concurrent Writes)

From the docs:

- Amazon S3 does not support object locking for concurrent writers. If two PUT requests are simultaneously made to the same key, the request with the latest timestamp wins. If this is an issue, you must build an object-locking mechanism into your application.

- Updates are key-based. There is no way to make atomic updates across keys. For example, you cannot make the update of one key dependent on the update of another key unless you design this functionality into your application.

- After 2020, AWS S3’s consistency model many operations are strongly consistent, but concurrent operations on the same key are not.

- The S3 API doesn’t have the concurrency control primitives necessary to guarantee consistency in the face of concurrent writes.