tags : Infrastructure, Containers, Kubernetes, Terraform, Object Store (eg. S3)

FAQ

EBS what?

- EBS can be confusing

- Elastic Beanstalk (EB)

- Elastic Block Storage (EBS)

- Proper use is EB and EBS

Security services in AWS?

See Cloud cryptography demystified: Amazon Web Services | Trail of Bits Blog

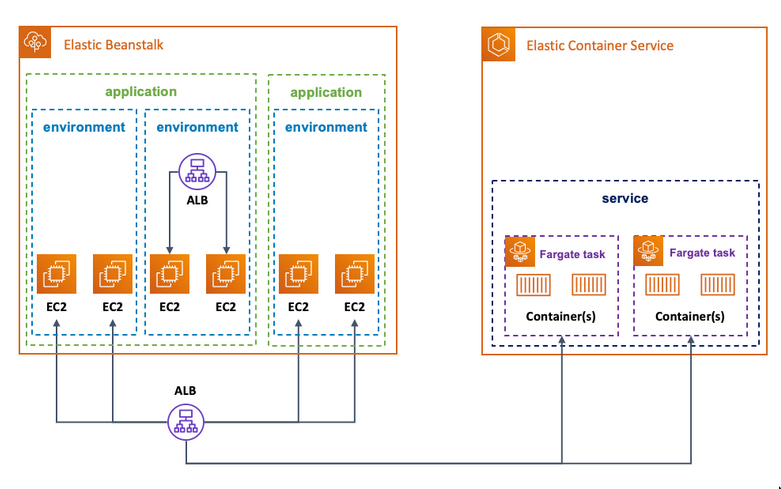

Deploying containers on AWS

- See Containers on AWS

- See 17 More Ways to Run Containers on AWS

- See Concurrency Compared: Lambda, App Runner, AWS Fargate

- K8s on EC2 / EKS: Extremely robust, scalable, and need tight controls over your containers.

- Docker Swarm on EC2: Simple orchestration but still want to manage it.

- ECS on EC2: Ease of use and want access to your hosts

- ECS on Fargate: Never think about anything other than the container

  - “ECS Service Connect introduces a new container (Service Connect proxy) to each new task upon initiation. Consequently, you are only billed for the CPU and memory allocated to this sidecar proxy container”

- Plain EC2 with docker/docker-compose: This is where you manually install docker and docker-compose on EC2. You can do this but I’d prefer using ECS with EC2 instead.

- EC2+Beanstalk+Docker: Can be done but pretty cumbersome.

- Docker on Lightsail

- Lightsail container service

- App Runner

Managed services?

Things that cost you vs things that don’t

| Thing | Costs? | Description |
|---|---|---|
| IGW | No | |
| Cross AZ NAT traffic | Yes | |
| Cross AZ load balancing (ALB) | No | |
| Cross AZ load balancing (NLB) | Yes | |
| Accessing public ALB from private subnet via NAT | Yes | |
| Accessing NAT gateway from different AZ | Yes | |

Concepts

AMI

- Amazon Machine Image

- pre-configured virtual machine image, used to create and launch instances within Amazon Elastic Compute Cloud (EC2).

AMI with ENA

- https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/enhanced-networking-ena.html

- https://github.com/amzn/amzn-drivers/blob/master/kernel/linux/ena/RELEASENOTES.md

- https://stackoverflow.com/questions/54296949/ami-not-enabled-for-ena

- Seems like normal NixOS AMI doesn’t come with ENA enabled for some reason.

- After creating the AMI, we manually run `aws ec2 modify-instance-attribute --instance-id "i-07d9ab8e3d893da34" --ena-support` on the instance running “our custom AMI” and then make an AMI out of that volume. This can be done via the UI or the CLI.

- Once that’s done, this AMI can be used to spin up instances that are enabled for ENA on boot.

Roles & Accounts & Users (IAM)

See An AWS IAM Security Tooling Reference | Hacker News

IAM Roles

- See IAM (Identity and Access Management)

- See AWS IAM Roles, a tale of unnecessary complexity | infosec.rodeo

- See Introduction to AWS IAM AssumeRole | by Rouble Malik | AWS in Plain English

- See IAM JSON policy elements reference - AWS Identity and Access Management

- See How IAM works - AWS Identity and Access Management

- Identity center and IAM relationship: See this

-

AssumeRole and Terraform

- See Will Terraform Delete my Existing Infrastructure on AWS when Apply?

- No

- Using AWS AssumeRole with the AWS Terraform Provider – HashiCorp Help Center

- Use AssumeRole to provision AWS resources across accounts | Terraform | HashiCorp Developer

- amazon web services - Execute Terraform apply with AWS assume role - Stack Overflow

- This is the way^ ❇

- Also see IAM tutorial: Delegate access across AWS accounts using IAM roles

- ARN

More notes on IAM

-

On Roles in general

- When creating an `iam role`, we need to specify two policies

  - Trust policy: This usually includes `sts:AssumeRole` and the `Principal` (who can use this role)

  - Identity policy: These are the permissions that the role gets to access different AWS resources, eg. access to S3, access to Secrets Manager etc.
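As a rough illustration of the two documents a role carries, here is a minimal Python sketch that builds them as plain JSON. The ECS tasks service principal, the S3 actions, and the bucket name `example-bucket` are placeholders chosen for the example, not values from these notes.

```python
import json

# Trust policy: WHO may assume the role (the Principal) via sts:AssumeRole.
# The ECS tasks service principal is only an example principal.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ecs-tasks.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Identity (permissions) policy: WHAT the role may do once it is assumed.
# "example-bucket" is a hypothetical bucket name.
identity_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Resource": "arn:aws:s3:::example-bucket/*",
        }
    ],
}

print(json.dumps(trust_policy, indent=2))
print(json.dumps(identity_policy, indent=2))
```

Note that only the trust policy has a `Principal`; the identity policy is attached to the role, so the role itself is the implied subject.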

-

On roles for application workloads

- Ideally `dev environment` and `prod environment` would be in two separate AWS accounts in the same organization (preferably organized per `OU`; we have one `OU` as we just have one product in houseware). In that case we would have different `dev-workload-role` and `prod-workload-role`, as an `iam role` is account specific.

- In our case, we’re not practicing a multi-account setup. Our `dev env` and `prod env` are in the same AWS account but in different regions. In this case having separate roles for `dev` and `prod` does not make much sense. So instead, we use the same role for managing workloads for `dev` and `prod`. We just add all of the resources (dev and prod) combined into the resource list of the `identity policy`.

- The workload roles themselves may be shared among different workloads if they need similar permissions; otherwise a workload may have a very specific policy for a role that it solely uses.

  - Eg. Policy X grants S3 access. Policy X is applied to role `WorkloadRole1`

  - Now workloads W1 & W2 need S3 access, they can assume the role `WorkloadRole1` (shared)

  - W3 is another workload with different AWS resource requirements; it’ll probably need a new specific policy and role.

-

On roles for our human users (IAM Identity Center)

- Currently we’re using IAM users/IAM user groups instead of IAM Identity center users

- It’ll be super convenient to use `IAM Identity Center` instead for user management, delegating access etc.

- But for now having `managed policies + user group` seems like a sane approach till we totally move to using Identity Center

- Identity Center has several benefits

  - If at some later point we move to a multi-account AWS setup for different deployment environments, IAM users, being account specific, would have to log in separately to each account. With Identity Center users, on top of user management being easier, the created user gets cross-account access, given they have valid permissions.

-

Resources

- These resources are around moving from IAM user to IAM Identity Center user

- We planned on making this move but because it was not prioritized, we did not implement this

- https://repost.aws/questions/QUkC_9SFrHRwG0DMo9TXpaEA/iam-get-started-confusion-iam-user-vs-iam-identity-centre-user

- https://www.reddit.com/r/aws/comments/15i337j/i_chose_iam_identity_center_was_this_a_dumb_thing/

- https://www.reddit.com/r/aws/comments/17zekzt/aws_iam_identity_centre_vs_sts/

- https://www.reddit.com/r/aws/comments/16zubg5/aws_organizations_and_sso_should_iam_users_be/

- https://www.reddit.com/r/aws/comments/17wp0ro/nontech_founder_confused_about_aws_root_user_best/

- https://docs.aws.amazon.com/organizations/latest/userguide/orgs_introduction.html

- https://docs.aws.amazon.com/IAM/latest/UserGuide/root-user-best-practices.html#

- https://docs.aws.amazon.com/securityhub/latest/userguide/iam-controls.html#iam-4

- https://repost.aws/knowledge-center/create-sso-permission-set

- https://docs.aws.amazon.com/IAM/latest/UserGuide/access_policies_access-advisor-view-data.html

-

More resources

Networking in AWS

AZ

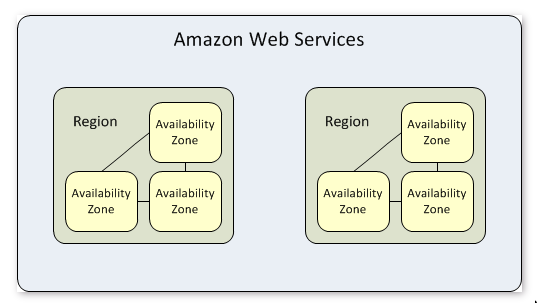

Regions vs Availability Zones

- Region: `us-east-1`, `us-east-2`

- AZ: `us-east-1a`, `us-east-1b`

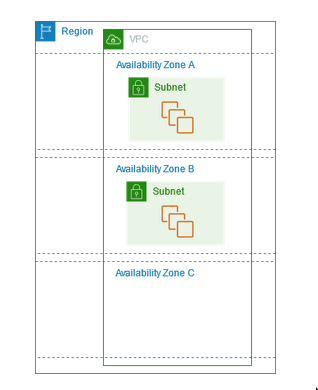

AZ and Subnets

- `1:N` relation (1(AZ):N(subnets))

- Each subnet must reside entirely within one Availability Zone and cannot span zones.

- Each AZ can have many subnets(public/private)

IP address assigning

- IP addressing for your VPCs and subnets - Amazon Virtual Private Cloud

- Everything has a private IP. When something also has a public IP, the public IP is mapped to the private IP of that instance.

- Can happen at a VPC level (??)

- Can happen at the subnet level

- This is what confused me. Having this setting enabled doesn’t make a subnet public. There’s a different definition of a public subnet

- Can happen at the instance level

- Also can be assigned via ElasticIP

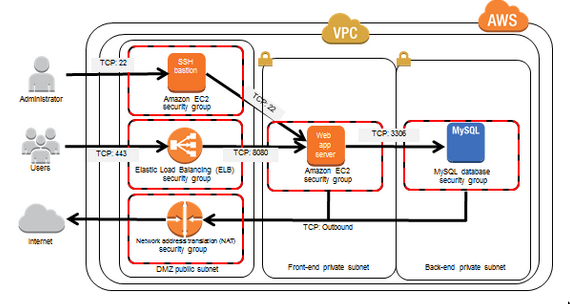

Subnets

Subnets in AWS(Cloud) vs Classical subnets

While the idea of a subnet is the same, the reasons why we create subnets in classical networking and in AWS/cloud are different

- On-prem, we typically use subnets (and their gateways) for filtering, containing broadcast traffic, fault isolation etc. (L2 involved)

- In cloud, there’s no L2. In AWS, each subnet can route to other subnets within the VPC range(s). We can filter using NACL and SG.

- Usecases of subnets in cloud are possibly the following

- Routing: If we need to separate traffic of IGW vs NGW vs VGW vs TGW etc. we need subnets, since route tables are associated per subnet

- HA: In AWS, subnets are related to AZ

- Traffic Filtering: If you want to use NACL, you’d need to create subnets

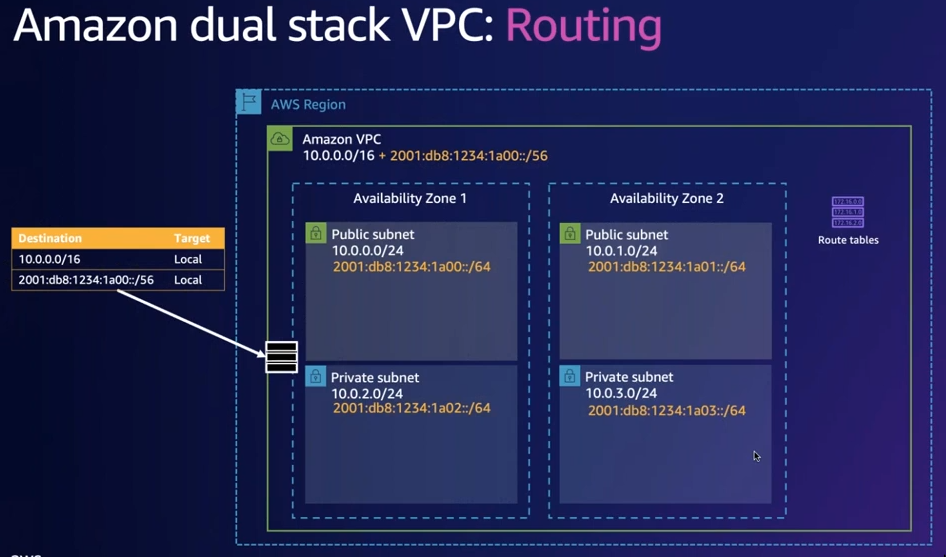

TODO Routing tables

- See Configure route tables to understand route-tables.

- There are different levels of route-tables

- Subnets have route table (Private and Public subnets have their own route table)

- Inter subnet communications

- Routing between subnets is handled internally within the VPC based on the routes defined in the associated route tables.

VPC

See Practical VPC Design (Also has notes about Security Groups)

- By default, when you create a VPC, a main route table is created.

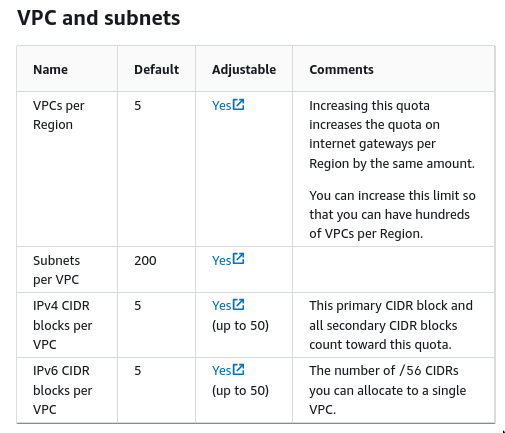

- Amazon VPC quotas

Subnets

-

Subnets and AZ

- Subnets exist in exactly one AZ

- CIDRs for each subnet in a VPC cannot overlap
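A quick way to sanity-check a subnet plan before creating anything is Python’s standard `ipaddress` module. This is only a sketch: the `10.0.0.0/16` VPC CIDR and the subnet names are hypothetical.

```python
import ipaddress
from itertools import combinations

vpc = ipaddress.ip_network("10.0.0.0/16")  # hypothetical VPC CIDR

# Carve the VPC into /24 blocks and hand the first four out as subnets.
blocks = vpc.subnets(new_prefix=24)
subnets = {
    "public-a": next(blocks),
    "public-b": next(blocks),
    "private-a": next(blocks),
    "private-b": next(blocks),
}


def find_overlaps(nets):
    """Return pairs of subnet names whose CIDR ranges overlap."""
    return [
        (a, b)
        for (a, na), (b, nb) in combinations(nets.items(), 2)
        if na.overlaps(nb)
    ]


# Every subnet must sit inside the VPC range.
assert all(n.subnet_of(vpc) for n in subnets.values())
print(find_overlaps(subnets))  # the four /24 blocks do not overlap

# A /23 covering the first two /24s overlaps with both of them.
subnets["bad"] = ipaddress.ip_network("10.0.0.0/23")
print(find_overlaps(subnets))
```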

-

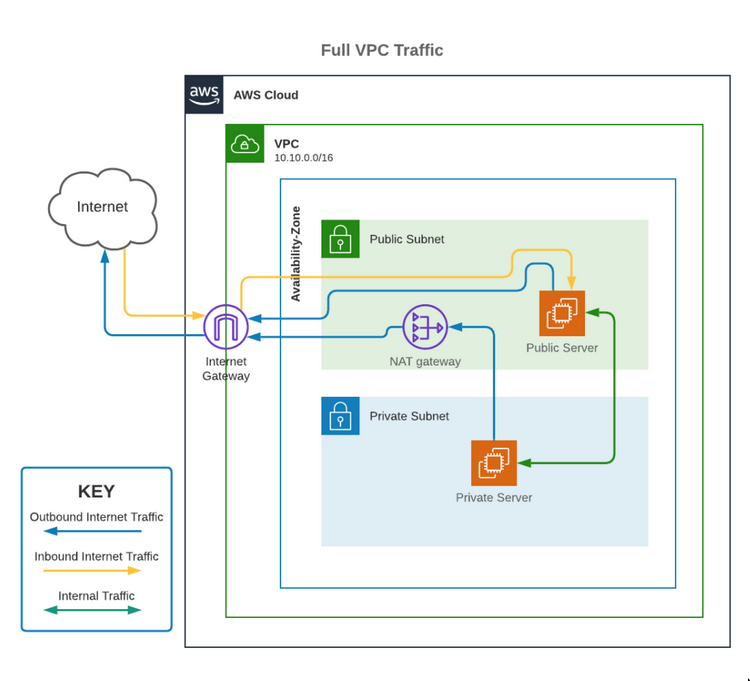

Public and Private

-

Public

- A subnet with the default route to the IGW

- IP assign

- Configure VPC to assign public addresses to instances

- Assign Elastic IP addresses to instances

-

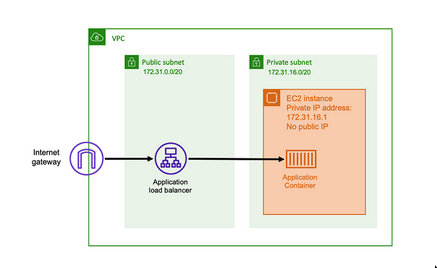

Private

- A subnet with NO default route to the IGW

- Instances in private subnet can’t communicate with the internet over the IGW

- Since instances in the private subnet only have private IPs, and the IGW does not do NAT for instances without a public IP, they simply cannot do outbound/inbound internet traffic through it. (But this is not the case w IPv6)

-

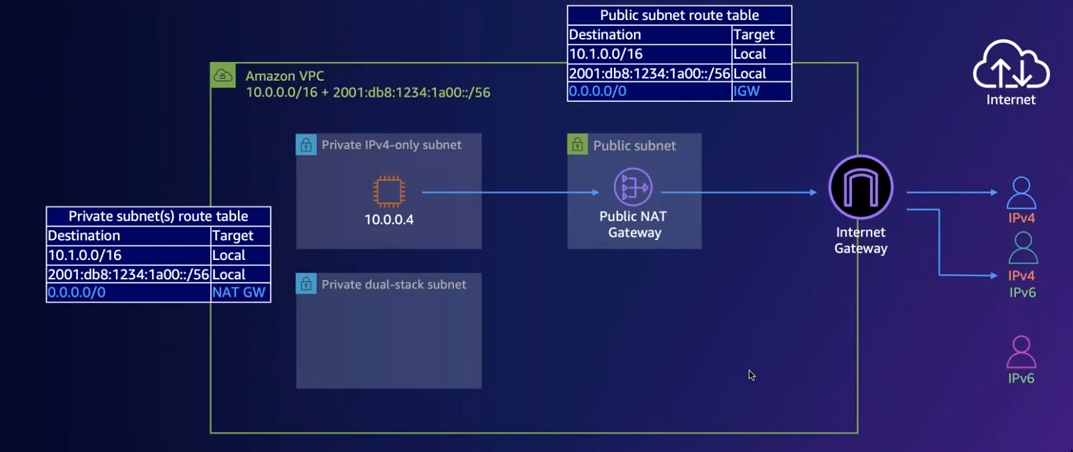

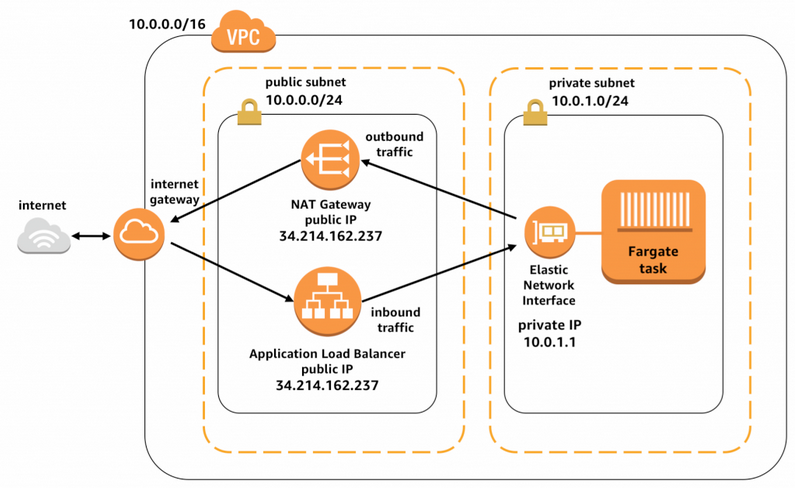

Internet access for instances in private subnet

- NAT instance/gateway in a public subnet

- 0.0.0.0/0 in private subnet points to the NAT Gateway

- NAT gateway points to IGW
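To make the public/private distinction concrete, here is a small Python sketch of how a route table lookup decides where traffic goes. It is a toy model, not an AWS API: route tables are plain dicts, and the `igw-0example` / `nat-0example` IDs and the `10.0.0.0/16` CIDR are made up.

```python
import ipaddress


def lookup(route_table, destination):
    """Pick the most specific (longest-prefix) route that matches destination."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


def is_public(route_table):
    """A subnet is 'public' when its default route points at an internet gateway."""
    return route_table.get("0.0.0.0/0", "").startswith("igw-")


# "local" stands for the implicit route covering the VPC CIDR.
public_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "igw-0example"}
private_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "nat-0example"}

print(lookup(private_rt, "10.0.1.25"))     # stays inside the VPC
print(lookup(private_rt, "203.0.113.10"))  # leaves via the NAT gateway
print(is_public(public_rt), is_public(private_rt))
```

The NAT gateway itself lives in a public subnet, so its own traffic is resolved against a table like `public_rt` and leaves through the IGW.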

-

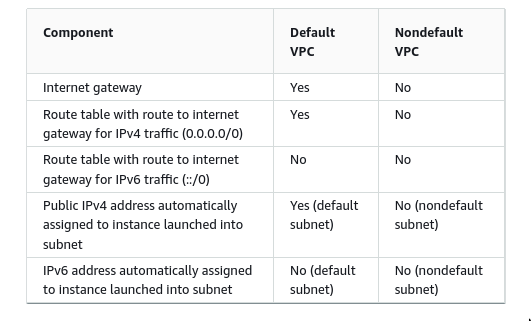

Default vs New VPC

- Default VPC has functional advantage

- A default VPC comes with a public subnet in each Availability Zone, an internet gateway, and settings to enable DNS resolution.

VPC and Gateways

- AWS Gateways are things that are related to a VPC, not to subnets

- VPC is just a LAN with local access until you stick an IGW on it.

- i.e a routing table entry allowing a subnet to access the IGW.

- Whether or not traffic uses IGW or NGW depends on subnet’s routing

- e.g. an instance w public EIP can go directly out the IGW

- e.g. if we route `0.0.0.0/0` to the NAT GW for that instance’s subnet, its traffic would get NAT’ed by the NAT GW.

DNS inside VPC

- Amazon DNS server

-

Private Hosted Zone

Access the resources in your VPC using custom DNS domain names, such as example.com, instead of using private IPv4 addresses or AWS-provided private DNS hostnames.

- Route 53 Private Hosted Zones across multiple Accounts : aws

- How to use Route53 for DNS resolution internally but Cloudflare externally? : aws

- https://community.cloudflare.com/t/delegating-sub-domains-to-aws-r53/8511

- domain name system - Cloudflare + AWS route 53 combined to handle records - Server Fault

- amazon web services - Can I use AWS route 53 and Cloudflare at the same time? - Stack Overflow

- Considerations when working with a private hosted zone - Amazon Route 53

VPC Peering, AWS Privatelink and VPC Endpoints

- `VPC Peering` links one VPC and its CIDR range to another, so you need to make sure the CIDR ranges do not overlap.

- `VPC Endpoints` are a way of connecting to managed services without going to the public internet and back again.

  - Usually you’d use AWS PrivateLink to implement VPC endpoints

- `AWS PrivateLink` is a technology allowing you to privately (without Internet) access services in VPCs. These services can be your own, or provided by AWS.

Gateway

Internet gateway (IGW, Two-way, Inbound & Outbound)

- Internet gateway routes public IPs `IN/OUT -> TO THE INTERNET`

- Does not do NAT for private subnets; for public subnets:

  - Logically provides the one-to-one NAT on behalf of your instance

  - When traffic goes from the VPC to the Internet, the reply address is set to the public IPv4 address or EIP of the instance

  - When traffic comes from the Internet to the EIP/public IPv4, the destination address is translated into the instance’s private IPv4 address before the traffic is delivered to the VPC.

- Two Way

- Allows resources within your public subnet to access the internet

- Allows the internet to access said resources in the public subnet.

NAT gateway (NGW, Oneway, Outbound)

- See NAT

- Translates private IPs to its public IP.

- You cannot associate a security group with a NAT gateway. You can associate security groups with your instances to control inbound and outbound traffic.

- A NAT gateway has to be defined in a public subnet

- Public NAT gateway is associated with One Elastic IP address which cannot be disassociated after its creation.

- A nat gateway still uses IGW to get to the Internet.

- One way

- private resources access the internet

-

Usecases

- Instances in private subnets talking to the internet

- Whitelisting IP: Sending all outgoing traffic through a NAT gateway (single IP) is useful when external services whitelist IP addresses. For this reason it’s useful to have an EIP for the NAT: eg. if you ever need to replace your NAT instance, you don’t need to update the whitelist elsewhere because the EIP stays the same :)

-

NAT gateway vs NAT instance

- See Compare NAT gateways and NAT instances

- See https://fck-nat.dev/stable/deploying/

- They both perform the same action

- NAT instance is just an EC2 instance: no HA, cheaper, and tough to scale.

- You run NAT software on an EC2 instance and manage it yourself.

- NAT gateway is HA plus scalable, hence costly. It’s a managed AWS service. You can’t SSH to NAT gateway and it’s very stable.

- So in a way, NAT instance is self managed NAT gateway

-

Other notes

- Cross AZ is expensive

- So we want a NAT gateway per AZ, i.e. one in a public subnet of each AZ

- If something always needs public internet access to operate, it’ll probably be cheaper to put it in a public subnet with appropriate SG rules.

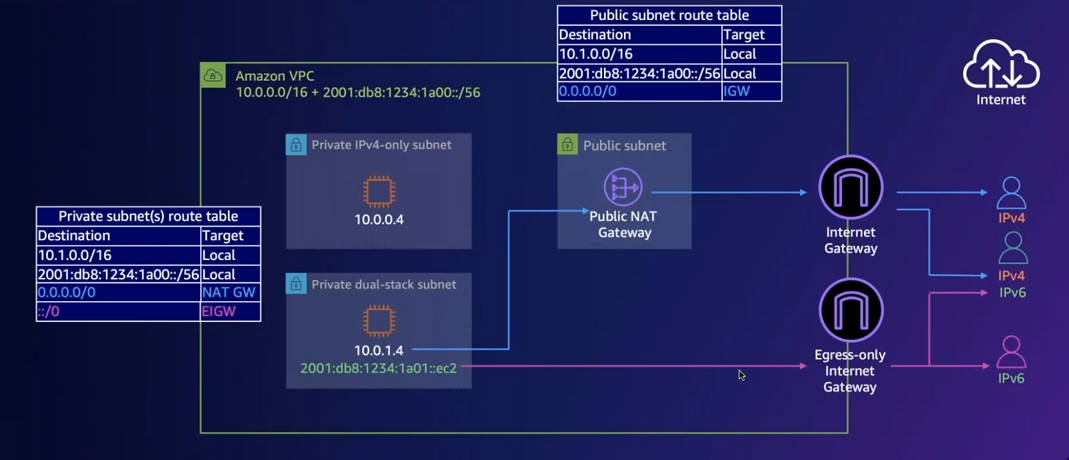

IPv6

Concepts/Services

-

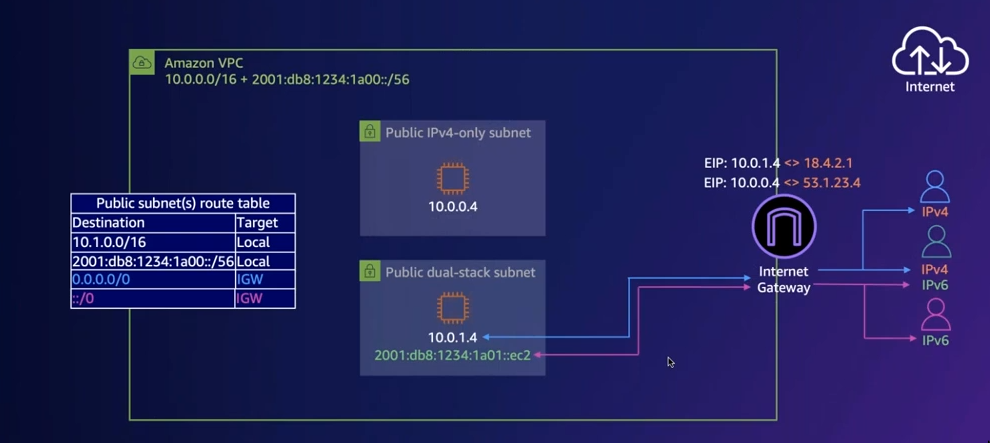

Dual stack

-

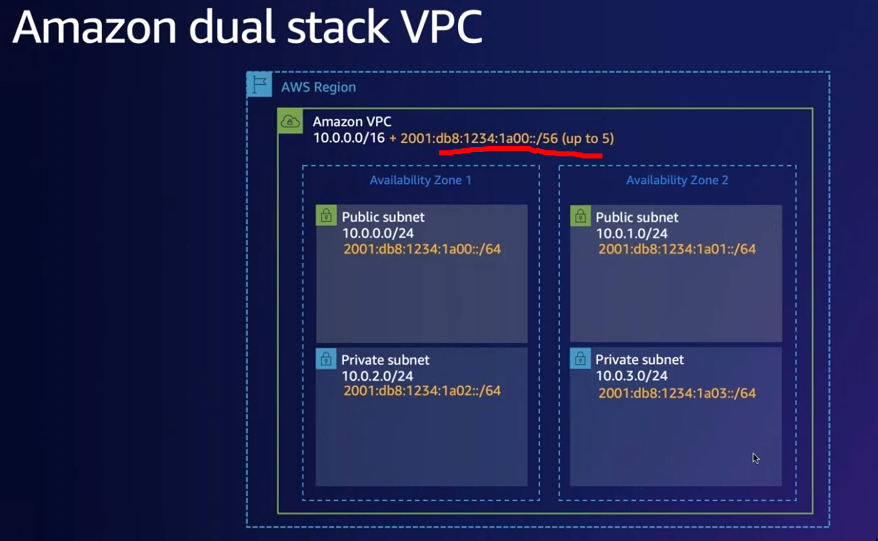

Dual stack VPC

A VPC is dual stack if it has CIDR blocks for both IPv4 and IPv6

-

IPv6 CIDR Block

- Can be dual stack or IPv4 only

-

IPv6 Routing

- VPC has routing table, subnets can talk to each other

-

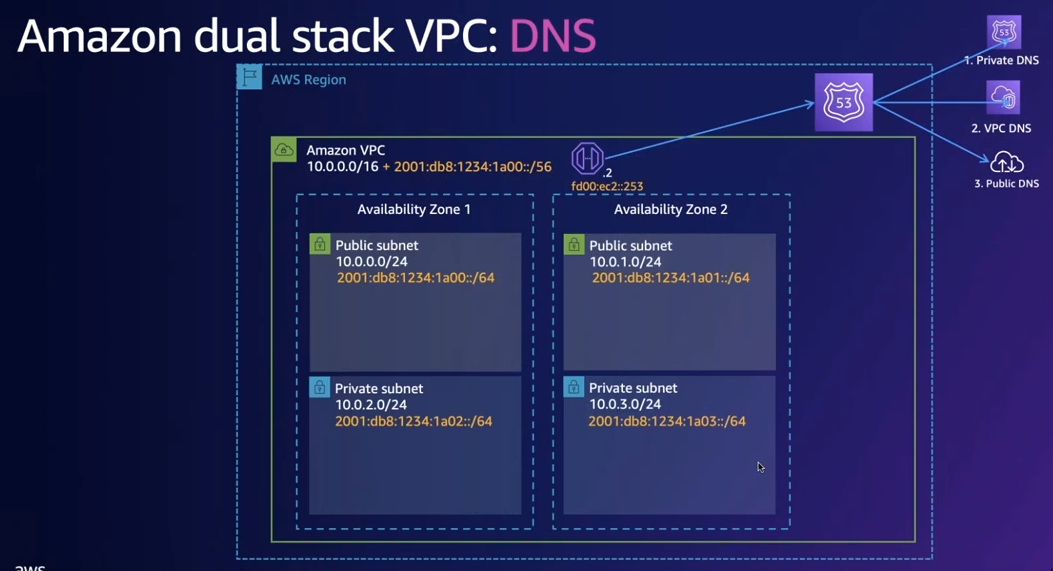

DNS

- Each VPC has its own DNS resolver (Route 53 Resolver), tied to the region’s `Route53`

  - DNS resolver has both IPv4 & IPv6 addresses

  - Each EC2 instance can send 1024 packets per second per network interface to Route 53 Resolver (.2 address)
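The “.2 address” is the base of the VPC’s IPv4 network range plus two. A minimal sketch of that arithmetic with the standard `ipaddress` module (the CIDRs below are just example inputs):

```python
import ipaddress


def resolver_address(vpc_cidr):
    """Return the VPC network base address plus two (the '.2' resolver address)."""
    network = ipaddress.ip_network(vpc_cidr)
    return network.network_address + 2


print(resolver_address("10.0.0.0/16"))    # 10.0.0.2
print(resolver_address("172.31.0.0/16"))  # 172.31.0.2
```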

-

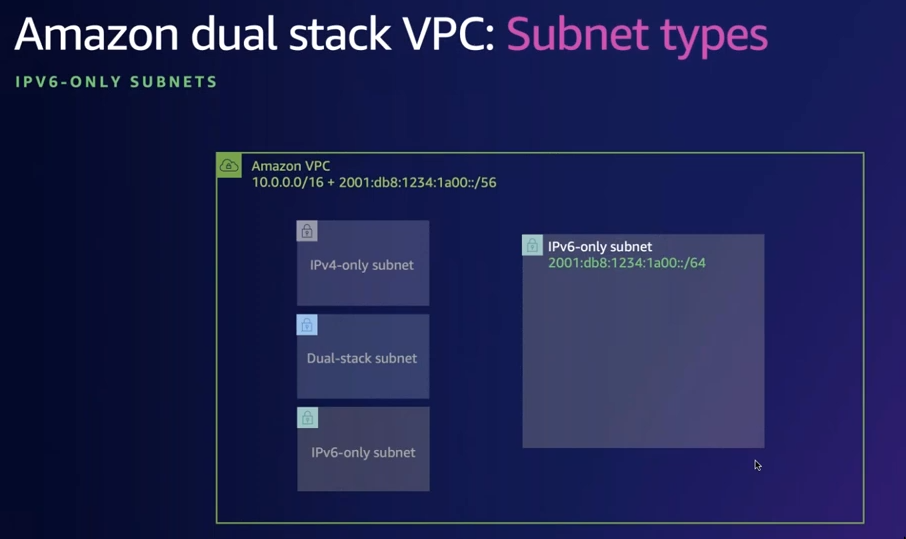

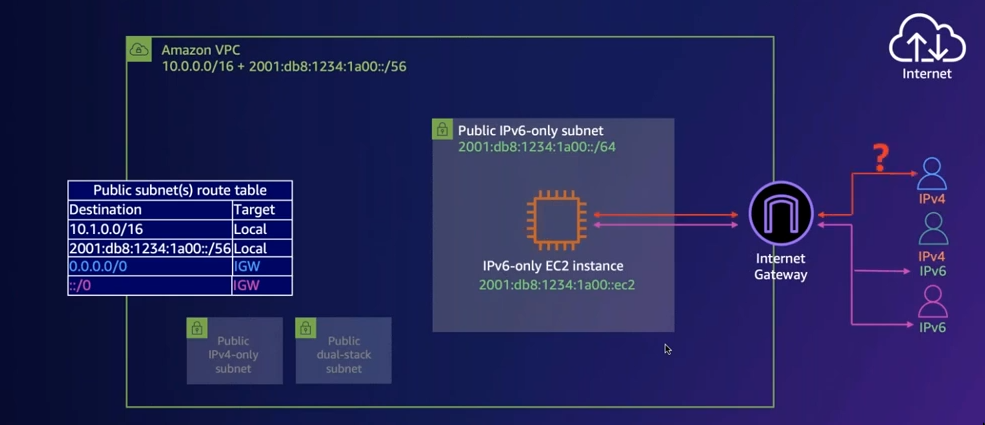

Subnet Types and traffic flow

- Dual stack (Eg. migrating existing subnet to IPv6)

- IPv4 only (Eg. existing subnet)

- IPv6 only

- All of these subnet types can co-exist in a VPC

-

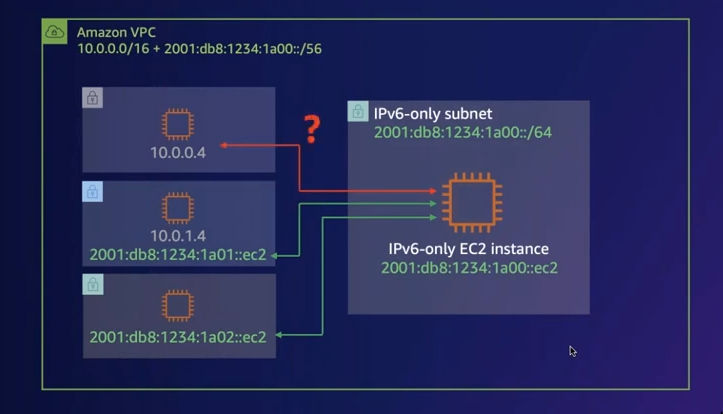

How will a IPv6 only EC2 instance talk to a IPv4 subnet?

- Do we run IPv4 & IPv6 in parallel? Backwards compatibility

-

- Dual stack ELB

-

-

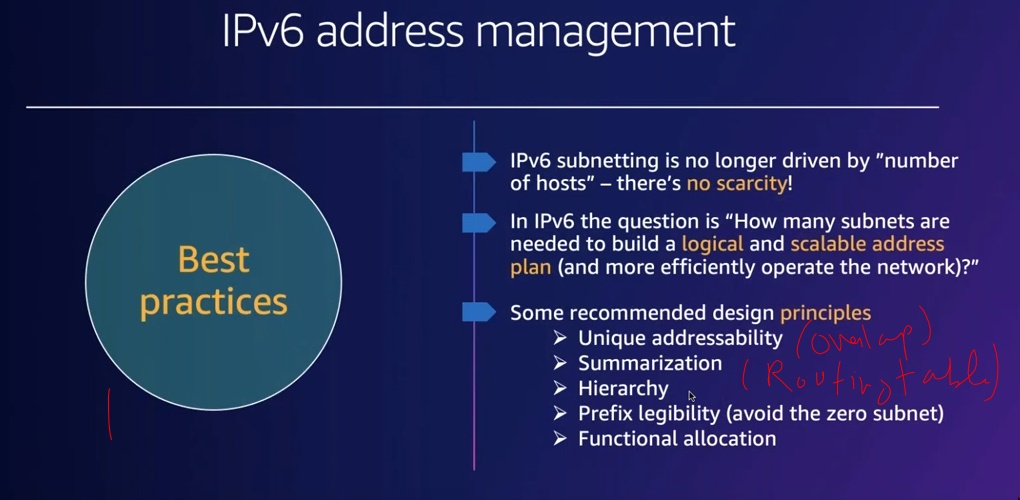

IP management

-

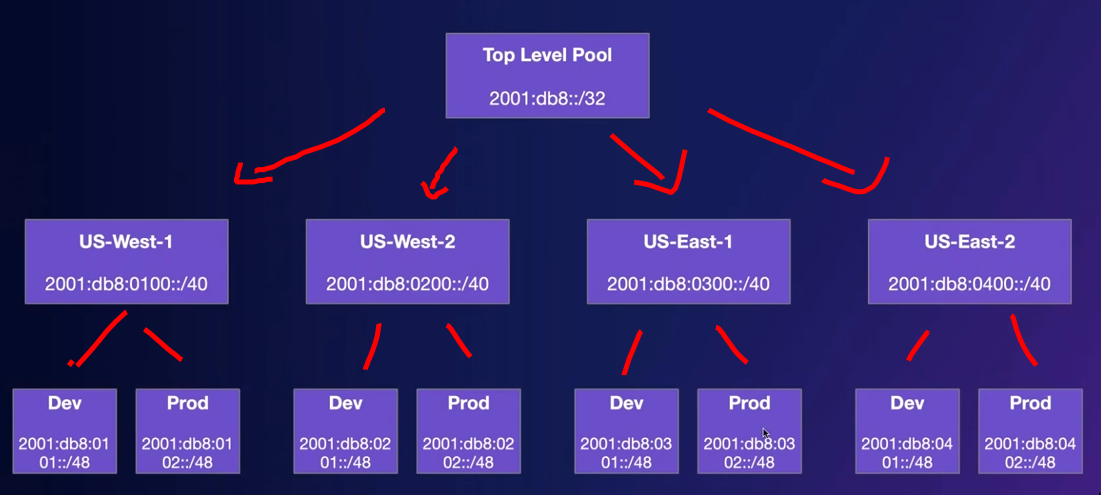

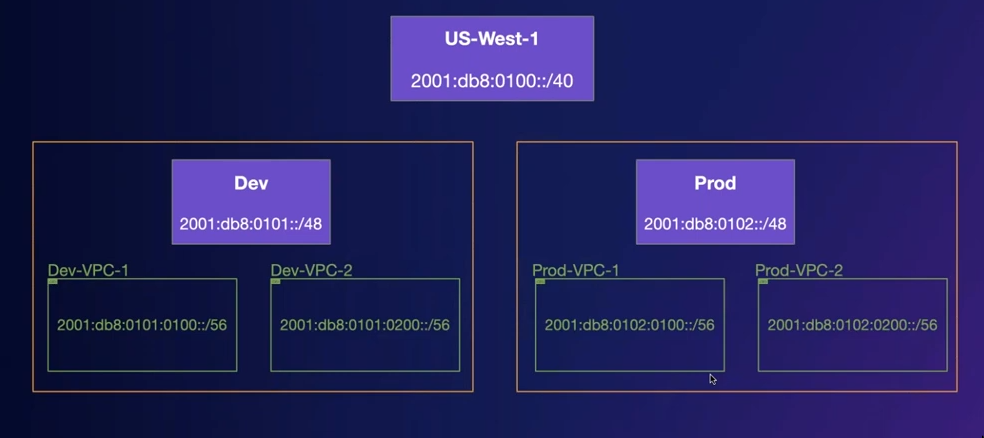

Hierarchy and Organization

We want things like easy summarization and good structure and hierarchy in our subnets.

- Example CIDR hierarchy: `Top level pool > regions > routing domains/environments > VPCs > Subnets`

- AWS by default assigns us a random CIDR for IPv6 (from the Amazon pool). Instead, we create our own pool so that we can manage things ourselves

  - Eg. for each `region` I am going to assign this CIDR block, and then from that block I’ll start allocating CIDRs to the VPCs.

-

IPAM

IPAM allows you to manage IP addresses across multiple accounts/regions

-

-

IPv6 IP assigning

- `/56`: For VPC (Fixed length) (Number of VPCs is limited, 5 non-overlapping VPC ranges.)

- `/64`: For subnets inside the VPC (Fixed length) (Number of subnets is limited)

- Network identifier and host identifier can be 64 bits each
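The prefix lengths above fix how many subnets fit in one VPC block: a `/56` leaves 8 bits to enumerate `/64` subnets. A small sketch with the standard `ipaddress` module, using the `2001:db8::/32` documentation range as a stand-in for a real allocation:

```python
import ipaddress

# Stand-in VPC block taken from the IPv6 documentation prefix (not a real allocation).
vpc = ipaddress.ip_network("2001:db8:1234:1a00::/56")

subnets = list(vpc.subnets(new_prefix=64))

print(len(subnets))                          # 2 ** (64 - 56) = 256
print(subnets[0], subnets[-1])               # first and last /64 in the block
print(subnets[0].num_addresses == 2 ** 64)   # 64 bits left for the host identifier
```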

-

Internet Connectivity

-

IPv6 to IPv6

- The IPv6 address of an EC2 instance in a public subnet in a VPC is a global unicast address.

- So we do not need NAT there; it can talk directly through the IGW.

-

TODO IPv6 to IPv4

- Using DNS64 and NAT64

- See IPv6 Addressing & Architecture: AWS - YouTube

-

IPv6 in a Private subnet

- The IPv6 address is a global unicast address

- So if an instance sits in a subnet that we consider private, but the subnet’s IPv6 default route points to the internet gateway, we’re exposing it bi-directionally to the internet.

- Because, unlike IPv4 private addresses, IPv6 addresses can reach the internet without NAT.

- Solution is to use an egress-only IGW

-

Usecases

-

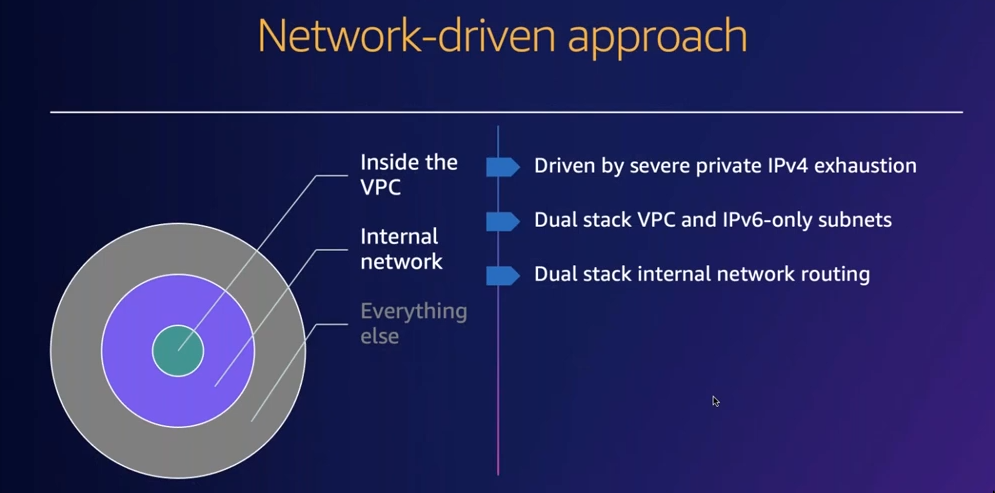

Network

- Acquisition: overlapping IPv4 addresses!

  - Solution

    - Dual stack VPC & IPv6 only subnets

    - Dual stack internal network routing

    - We have VPC2VPC methods that allow us to access the internal IPv6 network

    - VPC2VPC

    - AWS Transit Gateway

    - Cloud1

    - Hybrid connectivity w VPN

    - Direct Connect

-

Services & Businesses

- Exposing services

  - On AWS network

  - On public internet

  - Now the business wants to serve clients who are connecting to it using IPv6 (in places w greater IPv6 adoption)

  - Dual stack VPC (Network level) + Dual stack ELB (Service level)

Operations

Load Balancers

AWS offerings

-

ELB / CLB (Not recommended)

- Elastic Load Balancers

- aka CLB (Classic LB)

- Did both L4 and L7 load balancing

- Lot of limitations, ALB and NLB are newer.

-

ALB (L7)

- Primary purpose of ALB is to split traffic for the `same route` between `different backends`.

- Also rule based routing. (AWS API Gateway (AGW) only does this)

- NLB (L4)

reverse proxies vs Load Balancers?

Options are either of:

- NLB + nginx/traefik/caddy etc

- ALB + LB controller(if using Kubernetes)

Additionally:

- We can still use a reverse proxy + ALB, but that’s usually not necessary.

- For ECS anywhere an external load balancer may be useful.

Target Groups

-

Registering & Deregistering

- When registering, ensure that you have appropriate security groups that allow `LB-instance inbound` communication.

- When deregistering a target, the load balancer waits until in-flight requests have completed.

-

Targets types

This can be different for different kind of LB

- `instance-id` (picks ip-address from the instance)

  - `ASG` (AutoScalingGroup): With this you no longer need to manually add/remove targets from the TargetGroup as application load increases/decreases. Amazon EC2 Auto Scaling automates that add/remove of instances from the target group once you select an `existing LB` and `existing TG`

- `ip` (from valid CIDR block)

- `lambda`

-

Mapping

- `N:N` mapping, N(TG):N(Targets)

  - There can be multiple TG

  - A target can be associated with multiple TG

- `1:N` mapping, 1(LB):N(TG)

  - Each TG can be used with only one LB

  - One LB can have multiple target groups

ALB

Troubleshoot/Info?

- Network Traffic Distribution – Elastic Load Balancing FAQs – Amazon Web Services

- Troubleshoot your Application Load Balancers - Elastic Load Balancing

- Amazon ELB in VPC - Stack Overflow

What is an ALB really?

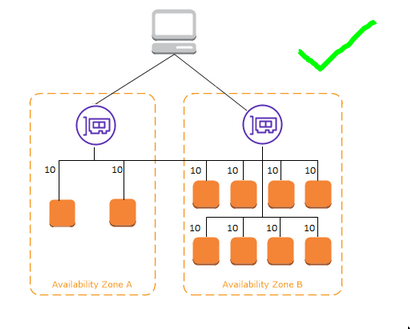

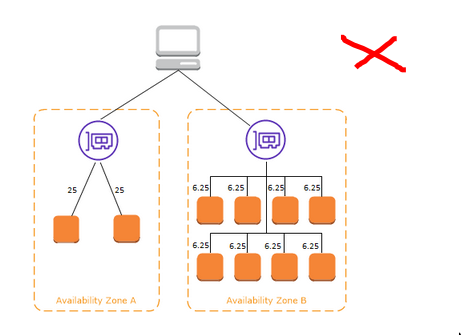

- 2 things: the definition of the `LB (LB object)` and the actual `provisioned instances per AZ`.

- As an AWS user, you only ever directly deal with the LB object (configuring etc).

- Based on your configuration, it provisions the LB instances in respective AZ which do the actual load balancing.

- ALB instances serve targets in its AZ, or in cross-AZ/cross-VPC as-well if targets are there.

- AZ and TG for an LB need to be in same VPC

Process of ALB creation

- LB object => LB instances in AZ => LB instances in subnet => IP address assigned

- DNS for both internal and internet-facing ALBs is publicly resolvable. Useful when you connect via VPN.

- Both `internet-facing` and `internal` load balancers route requests to your targets using private IP addresses. Therefore, targets don’t need a public IP to receive requests from an internal/internet-facing LB.

-

Internet facing ALB

- You define the LB object. (Two AZ)

- Since internet-facing, the subnets selected in those AZs will be `public` (route to IGW)

- It’ll provision LB instances in the two AZ

- The LB instances are assigned public IPs. These IPs can keep changing, so refer to the ALB by DNS name.

-

Internal ALB

- You define the LB object. (Two AZ)

- Since internal, the subnets selected in those AZs will be `private` (no IGW)

- It’ll provision LB instances in the two AZ

- A private IP will be assigned to the LB instance.

Placing an LB in a subnet and LB talking to targets

- You enable a AZ for a LB by placing the LB in that AZ, i.e placing the LB in one of the subnets of the AZ

- Once it’s placed in a subnet in an AZ, by virtue of VPC route tables, it’ll be able to talk to all private/public subnets wherever the targets are.

What about capturing real client IP?

See Capture client IP addresses in the web server logs behind an ELB & HTTP
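Since the target only sees the load balancer’s private IP as the source, the client address has to be read from the `X-Forwarded-For` header. The helper below is a hedged sketch, not code from the linked article: `client_ip_from_xff` and its `trusted_proxies` parameter are hypothetical names, and it assumes each trusted proxy appends the address it received the request from.

```python
def client_ip_from_xff(header_value, trusted_proxies=1):
    """Return the address recorded by the outermost trusted proxy.

    Each proxy appends the address it received the request from, so with a
    single load balancer in front the right-most entry is the one the load
    balancer itself saw. Entries further left were supplied by the client
    and can be spoofed.
    """
    hops = [h.strip() for h in header_value.split(",") if h.strip()]
    if len(hops) < trusted_proxies:
        return None
    return hops[-trusted_proxies]


# Only the load balancer in front: take the last entry.
print(client_ip_from_xff("203.0.113.7"))
# Client sent its own (possibly forged) header; the last entry is still what the LB saw.
print(client_ip_from_xff("10.9.9.9, 203.0.113.7"))
# Two trusted hops (eg. a CDN in front of the LB): skip the CDN's address.
print(client_ip_from_xff("198.51.100.1, 203.0.113.7, 192.0.2.50", trusted_proxies=2))
```

Taking the left-most entry is the common shortcut, but it is only safe when nothing untrusted can set the header before the first proxy.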

How does ALB talk to clients(users) and targets?

- See How ELB works doc

- Can use multiple HTTP based protocols for client communication; uses HTTP/1.1 for target communication by default

- Both `internet-facing` and `internal` LB route requests to targets using `private IP addresses`

Usecase of internal ALB?

Usecase of external ALB is apparent, but following are some usecases for internal ALB

- Multi tier

  - `internet-facing LB <-> Web-server(s)`

  - `internal LB <-> Application-server(s)`

  - Final: `internet-facing LB <-> Web-server(s) <-> internal LB <-> Application-server(s)`

  - The web-server(s) can be looking up a DNS record which would point to the internal LB and then it can use Host headers etc.

- Giving access to internal services in a VPC

- Suppose you want to host grafana/some other internal service in the VPC

- People can use some kind of VPN to access the VPC

- The internal LB can be handy here trying to give access to the various internal services to people using the VPN

- Service Discovery (See Infrastructure)

  - While AWS offers various ways to do service discovery, having an internal LB can also be a way to do the same.

  - Cloudmap, ECS Service Connect, AppMesh, etc.

  - `service A -> internal LB -> service B`

Connection establishment vs Traffic flow in ALB

-

Connection Establishment

The following diagrams initially confused me: I mixed up the direction of connection establishment with the direction of traffic flow.

-

Traffic flow

- Traffic: `IGW <-> (ALB, Public subnet) <-> (Web Server, Private subnet)`

  - Reply traffic from the webserver will go through the ELB. It does not need a NAT.

  - ALB acts as a TCP proxy here: it establishes a new TCP connection to the back-end server.

  - Webserver will see incoming traffic from ALB’s IP

  - Only outgoing traffic purely originating from instances (Eg. `apt update`) in the private subnet needs to go through the NAT gateway.

  - See Load balancer subnets and routing (Traffic flow)

  - See Receiving inbound connections from the internet

Benefits of Cross-AZ load-balancing?

For ALB, at least two AZs must be enabled, and cross-zone load balancing is always enabled at the load balancer level.

Does ALB have anything to do with NAT Gateway?

- No. Both can operate independently as per need.

- Usecase of both is different

- Reply traffic goes through the ALB only, no NAT needed. Check the traffic flow question.

TODO Security in AWS

- Well, it’s just an AWS Account ID!

- The AWS Security Reference Architecture - AWS Prescriptive Guidance

- HIPAA on AWS—Solution

Certificates

- ACM certificate by region

SG

- security groups can be used to control networking access by only allowing inbound traffic from other known security groups that you add to the allow list. (Verify??)

NACL vs Security Groups

If we want defense in depth, we want to be using both

| | NACL | SG |
|---|---|---|
| Stateful? | Stateless (Return traffic needs to be explicitly allowed) | Stateful |
| Rules | Both Allow and Deny | Allow only |
| Association | Subnets (1:N mapping, 1(NACL):N(subnet)) | Network Interface, Instance (EC2) |
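The stateful/stateless row is the one that bites in practice, so here is a toy Python model of it. This is not how AWS implements either feature: the classes, the client tuple, and the port numbers are all made up for illustration.

```python
class SecurityGroup:
    """Stateful, allow-only: replies to an allowed connection pass automatically."""

    def __init__(self, inbound_ports):
        self.inbound_ports = set(inbound_ports)
        self.connections = set()

    def inbound(self, client, port):
        allowed = port in self.inbound_ports
        if allowed:
            self.connections.add(client)  # remember the flow
        return allowed

    def reply(self, client):
        return client in self.connections  # no outbound rule needed


class NetworkAcl:
    """Stateless: each direction is checked against its own rules."""

    def __init__(self, inbound_ports, outbound_ranges):
        self.inbound_ports = set(inbound_ports)
        self.outbound_ranges = list(outbound_ranges)

    def inbound(self, client, port):
        return port in self.inbound_ports

    def reply(self, client):
        _, client_port = client  # the reply goes to the client's ephemeral port
        return any(lo <= client_port <= hi for lo, hi in self.outbound_ranges)


client = ("203.0.113.10", 50000)  # (ip, ephemeral source port)

sg = SecurityGroup(inbound_ports=[443])
print(sg.inbound(client, 443), sg.reply(client))

nacl = NetworkAcl(inbound_ports=[443], outbound_ranges=[])
print(nacl.inbound(client, 443), nacl.reply(client))  # reply is dropped

nacl_fixed = NetworkAcl(inbound_ports=[443], outbound_ranges=[(1024, 65535)])
print(nacl_fixed.reply(client))  # explicit ephemeral-port rule lets the reply out
```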

Also see

- Security groups for your Application Load Balancer - Elastic Load Balancing

- https://www.reddit.com/r/aws/comments/8d5bdy/in_my_company_all_application_servers_for/

Storing application secrets

Services

EC2

- `Security Groups` are the firewalls of EC2

ECS

- A Container orchestration tool/layer

- We can have multiple ECS clusters

- Alternative: Kubernetes, Docker Swarm

- Is free, You pay for the underlying host(ec2/fargate)

- ECS will allow you to deploy a wide variety of containers on a number of instances and automatically handles the complexity of container placement and lifecycle for you. This is useful compared to using docker-compose on your ec2 instance.

- Handles Services&Task Definitions

Additional notes

- ECS can integrate with other AWS services like load balancers, but they leave that completely up to you. It’ll just orchestrate your stuff. Unlike Elastic Beanstalk (EB), it’ll not put a load balancer etc. in place for you.

- Passing runtime configuration to containers needs a workaround, as ECS doesn’t have ConfigMaps like Kubernetes

- SSM parameters like this image

- init container + s3

Flavors

- ECS on EC2

- EC2 instances need the ECS agent installed (or an AMI that ships with it)

- Resources in EC2 might be over-provisioned if the container does not use most of it

- You can also have it manage ASGs of your own EC2 instances

- ECS on Fargate

- Managed

- If we configure properly only the resources needed for the container will be used.

- Uses Firecracker (See Virtualization, Containers, OCI Ecosystem)

Elastic Beanstalk (EB)

- Beanstalk is a Platform as a Service (PaaS) offering

- Puts the application in a autoscaling group by default?

- Also will deploy a load-balancer.

- It spins up a few opinionated services under the covers for you in an attempt to make deploying your application easier. (may not be desirable)

- It’s like heroku from AWS

Lightsail

See Compare Amazon EC2 and Amazon Lightsail | AWS re:Post

- Easiest way to run a virtual machine on AWS.

- Good if you simply want to host simple one-off webapps/custom code etc.

- Has restrictions on features and capabilities versus ec2.

- Has a lightsail specific loadbalancer

- No autoscaling

- Network

- Managed by AWS

- No concept of private subnet

- w EC2 user gets to configure the VPC.

Lightsail container services

- This is a separately priced offering from Lightsail instances, but it falls under the Lightsail umbrella.

- It’s very simplified container deployment: image URL and port, that’s all.

- Under the hood, ECS powers Lightsail container services.

AppRunner

Cloudfront

CDN

API gateway (AGW)

- See CORS, alternative could be selfhosting something like Kong

- API gateway’s primary purpose is rule based routing.

- Once you get beyond a low number of requests, it becomes a lot more expensive than an ALB,

- Features

- Rate Limiting

- Authorizers

- B/G Deployments

CDN/Cloudfront infront of API Gateway?

Useful for couple of reasons:

- Caching: Even if API gateway may have its own caching, could be expensive

- Clients will connect to the nearest point of contact

- HTTPS (TLS) negotiations happens in the nearest point reducing latency

ACM (AWS Certificate Manager)

Spot Instances

All of these can either run on base AMI or user data. Having a loaded ami will obv. boot faster.

- Allows running fault-tolerant workloads at a much cheaper price

- You need to specify a “fleet”; a stronger fleet is one with a more diverse combination of machine types. (Eg. if you need fruit, you specify apple, mango and banana in the fleet; this way you maximize the probability of getting a fruit. If apple isn’t available you’ll get the banana.)
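The fruit analogy can be put into rough numbers. The sketch below assumes each instance type (capacity pool) is available independently with some probability; both the independence assumption and the `0.7` figures are hypothetical, not AWS data.

```python
from math import prod


def p_any_available(pool_probabilities):
    """P(at least one pool has capacity), assuming pools are independent."""
    return 1 - prod(1 - p for p in pool_probabilities)


one_type = p_any_available([0.7])               # only "apple" in the fleet
three_types = p_any_available([0.7, 0.7, 0.7])  # "apple", "mango", "banana"

print(round(one_type, 3), round(three_types, 3))
```

With these made-up inputs the chance of getting some machine goes from 0.7 to about 0.973, which is the whole argument for listing several instance types.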

Creating Spot instance from UI & Terraform

- w UI

- Request an instance fleet

- Make sure the AMI, region and security groups are set in a template manner + desired os + deps, so we have a ready-to-go machine as soon as the spot request is fulfilled.

- In my case, the spot instance will automatically join a Tailscale network and register itself as a Nomad client; this way workloads waiting to run can simply start running there.

- w `tf:ec2_instance`

  - This has some spot configurations but not enough for fleet and maintenance

  - so if we need spot and we want to self heal, we’d need to go with ASG.

  - Quite limited

Spot instances w EC2 Fleet

Caution:

- tf:aws_spot_fleet_request and tf:aws_spot_instance_request both are deprecated.

- Instead use the `ec2` and `asg` resources which have ways to specify spot related config. See Everything you need to know about autoscaling spot instances | Spot.io

- We can create spot instances for one-time use easily, but usually it is the case that we want to “maintain” spot instances over time, i.e if a spot instance gets taken down we should automatically make an effort to get another spot instance up asap.

- For this, AWS gives us 2 options as described here

- EC2 Fleet (This is the recommended way to “maintain” spot instances)

- Spot Fleet (This is accessible from the AWS Console UI & there’s a deprecated Terraform module)

- This basically just creates an EC2 spot request with “maintenance” config based on the launch template

Hence, we use tf:EC2 Fleet

```hcl
# NOTE: It takes a long time to delete/destroy aws_ec2_fleet resource for some
# reason
resource "aws_ec2_fleet" "big_machine_spot_fleet" {
  type = "maintain"

  # NOTE: The architecture of launched machines depend on the AMI. i.e Instances
  # will be launched with a compatible CPU architecture based on the Amazon
  # Machine Image (AMI) that you specify in your launch template.
  launch_template_config {
    launch_template_specification {
      launch_template_id = aws_launch_template.big_machine_template.id
      version            = aws_launch_template.big_machine_template.latest_version
    }

    override {
      subnet_id = aws_subnet.bongstars-public.id

      instance_requirements {
        allowed_instance_types = ["r*", "c*", "t3*"]
        local_storage          = "excluded" # we prefer ebs only machines

        memory_mib {
          min = 1000
          max = 5000
        }

        vcpu_count {
          min = 1
          max = 2
        }
      }
    }
  }

  excess_capacity_termination_policy  = "termination"
  replace_unhealthy_instances         = true # default is false
  terminate_instances                 = true # delete instances if fleet is deleted
  terminate_instances_with_expiration = true

  spot_options {
    allocation_strategy            = "price-capacity-optimized"
    instance_interruption_behavior = "terminate"

    maintenance_strategies {
      capacity_rebalance {
        replacement_strategy = "launch-before-terminate" # undocumented in docs
        termination_delay    = 121                       # undocumented in docs, needs to be greater than 120
        # replacement_strategy = "launch"
      }
    }
  }

  target_capacity_specification {
    default_target_capacity_type = "spot"
    total_target_capacity        = 1
  }
}
```

Spot instances w Autoscaling group

- This is similar to EC2 Fleet in terms of usage but I feel this is the most pragmatic way to do it.

- Other benefits include fitting better into the ASG ecosystem (load balancers and such) and being able to scale up and down, rather than having to deal with the specifics of how “termination” works in EC2 fleets.

- ASG is basically a nice wrapper to use when having to deal w EC2 fleets. Each instance is made as an `instance` request, unlike `fleet` requests. But that’s an implementation detail. (Eg. the `persistent` in `spot-request` will show as one-time, but if by any chance the instance dies, the ASG takes the responsibility to spin things up again)

“AWS Auto Scaling is a Service on its own. EC2 fleet is a feature of EC2. You can also configure EC2 Fleet functionality to work with Auto Scaling.”

- EC2 fleet allows you to simplify the provision of a “group” of instances.

- Auto Scaling allows you to provision, monitor and scale instances depending on the configuration you set for the group.

- You can use EC2 Fleet from ASG.

- Amazon EC2 Fleet Functionality is Now Available via EC2 Auto Scaling in the AWS GovCloud (US-West) Region
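For comparison with the EC2 Fleet config, the ASG route to spot can be sketched with the parameters boto3's `create_auto_scaling_group` accepts. This is a minimal sketch, not the setup used in these notes: the group name, launch template ID, subnet ID and instance types are placeholders, and the helper `spot_asg_params` is hypothetical. It only builds the request, so it can be inspected without AWS credentials.

```python
def spot_asg_params(name, launch_template_id, subnet_ids, instance_types, max_size=1):
    """Build kwargs for boto3 autoscaling.create_auto_scaling_group (all-spot group)."""
    return {
        "AutoScalingGroupName": name,
        "MinSize": 0,
        "MaxSize": max_size,
        "DesiredCapacity": 0,
        # The API expects a comma-separated string of subnet IDs.
        "VPCZoneIdentifier": ",".join(subnet_ids),
        # Proactively replace spot instances that are at elevated risk of interruption.
        "CapacityRebalance": True,
        "MixedInstancesPolicy": {
            "LaunchTemplate": {
                "LaunchTemplateSpecification": {
                    "LaunchTemplateId": launch_template_id,
                    "Version": "$Latest",
                },
                # One override per instance type the group may pick from.
                "Overrides": [{"InstanceType": t} for t in instance_types],
            },
            "InstancesDistribution": {
                # 0 on-demand base and 0% on-demand above base means spot only.
                "OnDemandBaseCapacity": 0,
                "OnDemandPercentageAboveBaseCapacity": 0,
                "SpotAllocationStrategy": "price-capacity-optimized",
            },
        },
    }


# Placeholder identifiers, for illustration only.
params = spot_asg_params(
    "batch-spot", "lt-0123456789abcdef0", ["subnet-abcd"], ["r5a.large", "r5.large"]
)

# To actually create the group (needs boto3 and AWS credentials):
#   import boto3
#   boto3.client("autoscaling").create_auto_scaling_group(**params)
```

With this shape the ASG, not a fleet request, is what keeps the spot capacity “maintained”: if an instance is reclaimed, the group launches a replacement to get back to the desired capacity.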

Autoscaling

- Instances launched with an ASG won’t have a `Name` tag, however.

This is in terms of Nomad: it has an AWS autoscaling plugin. When it sees (via Prometheus metrics) that things are queued up, it can trigger AWS via that plugin and increase the target count of the ASG. Once the new instance is running, the Nomad jobs automatically get placed there, and so on.

Build on top of AMI every time

I don’t like this way much: it’s both sort of unmaintainable and takes a lot of time to spin up, as it builds every time.

Terraform

    resource "aws_launch_template" "poopmachine" {
      name_prefix            = "poopmachine"
      image_id               = data.aws_ami.nixos24_05.id
      instance_type          = "r5a.xlarge" # 32G, 4cpu, amd
      key_name               = aws_key_pair.geekodour_laptop_key.key_name
      vpc_security_group_ids = [aws_security_group.bouncer.id]

      # NOTE: This will build the packages on-every machine boot/re-boot. So this
      #       might make the time for things to be up a little slow, if we want to
      #       avoid this delay, we'd need to build custom AMIs
      # TODO: We should do this(build custom AMI) ASAP as even on r5a.xlarge, it's
      #       taking ~10m just to install deps as in nix, it'll build the binaries
      #       on the machine.
      # TODO: Missing telemetry data!
      #       Telemetry config files and logs are also not getting sent to grafana
      #       cloud for the same reason. We need some way to bake in that config.
      #       Doing it with AMIs will be simpler.
      user_data = base64encode(templatefile(
        "${path.module}/templates/batch_nomad_client.nix.tpl",
        {
          node_class = "batch-runner"
          datacenter = "development"
      }))

      block_device_mappings {
        # see https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/device_naming.html
        device_name = "/dev/xvda"

        ebs {
          volume_size = 20
          volume_type = "gp3"
        }
      }

      iam_instance_profile {
        name = aws_iam_instance_profile.ops_profile.name
      }

      lifecycle {
        ignore_changes = [image_id]
      }
    }

    resource "aws_autoscaling_group" "poopmachine" {
      name             = "poopmachine"
      min_size         = 0
      max_size         = 1
      desired_capacity = 0

      # NOTE: we're simply using the default vpc subnet, if we put this in a private
      #       subnet, we'll have to setup NAT gateway/instance for internet access.
      vpc_zone_identifier = ["subnet-abcd"]

      launch_template {
        id = aws_launch_template.poopmachine.id
      }

      # NOTE: nomad adds some tags, we don't want to manage that ourselves. It's
      #       harmless to ignore those.
      lifecycle {
        ignore_changes = [tag]
      }
    }

Template

    { modulesPath, config, lib, pkgs, ... }: {
      imports = [
        (modulesPath + "/installer/scan/not-detected.nix")
        (modulesPath + "/virtualisation/amazon-image.nix")
      ];

      nix.settings.experimental-features = [ "nix-command" "flakes" ];
      nixpkgs.config.allowUnfree = true;

      # We keep this access even if we have tailscale ssh for any case tailscale
      # daemon stops working
      users.users.root.openssh.authorizedKeys.keys = [
        "ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIEgtcOFP7ZLkmkqpZhXf5YZ1+kFw9YEyYtyVpsRm0RgC" # geekodour pc
      ];

      users.users.geekodour = {
        isNormalUser = true;
        extraGroups = [ "wheel" "input" "docker" ]; # Enable 'sudo' for the user
        shell = pkgs.fish;
        openssh.authorizedKeys.keys = [
          "ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIEgtcOFP7ZLkmkqpZhXf5YZ1+kFw9YEyYtyVpsRm0RgC" # geekodour pc
        ];
      };

      programs.fish.enable = true;

      services.tailscale = {
        enable = true;
        package = pkgs.tailscale;
        interfaceName = "tailscale0";
      };

      # oneshot job to authenticate to tailscale
      systemd.services.tailscale-autoconnect = {
        description = "Automatic connection to Tailscale";

        # make sure tailscale is running before trying to connect to tailscale
        after = [ "network-pre.target" "tailscale.service" ];
        wants = [ "network-pre.target" "tailscale.service" ];
        wantedBy = [ "multi-user.target" ];

        # set this service as a oneshot job
        serviceConfig.Type = "oneshot";

        # have the job run this shell script
        script = with pkgs; ''
          # wait for tailscaled to settle
          sleep 2

          # check if we are already authenticated to tailscale
          status="$($${tailscale}/bin/tailscale status -json | $${jq}/bin/jq -r .BackendState)"
          if [ $status = "Running" ]; then
            # if so, then do nothing
            exit 0
          fi

          # otherwise authenticate with tailscale
          # https://login.tailscale.com/admin/settings/keys
          # Usually you'd pick a single use key from the UI and it should work
          # NOTE: This key can be stored in AWS parameter store
          $${tailscale}/bin/tailscale up --ssh -authkey <CUSTOMKEY>
        '';
      };

      # see https://developer.hashicorp.com/nomad/docs/install
      # NOTE: These are enabled by default in nixos, adding this for extra
      # confirmation
      boot.kernel.sysctl = {
        "net.bridge.bridge-nf-call-arptables" = 1;
        "net.bridge.bridge-nf-call-ip6tables" = 1;
        "net.bridge.bridge-nf-call-iptables" = 1;
      };

      systemd.services.nomad = {
        after = [ "tailscale-autoconnect.service" ];
      };

      services.nomad = {
        enable = true;
        enableDocker = true;
        package = pkgs.nomad_1_7;
        # see (amazon-ecr-credential-helper non-login shell) https://github.com/awslabs/amazon-ecr-credential-helper/issues/161
        extraPackages = with pkgs; [ cni-plugins amazon-ecr-credential-helper getent ];
        dropPrivileges = false; # run as root to access sockets

        # This config goes to /etc/nomad.json on the nixos host
        settings = {
          bind_addr = '' GetInterfaceIP "$${config.services.tailscale.interfaceName}" '';
          datacenter = "${datacenter}";
          data_dir = "/var/lib/nomad/";

          client = {
            node_class = "${node_class}"; # to be used with affinity
            enabled = true;
            servers = ["HOSTNAMEOFSERVER"]; # since we're using tailscale, magicdns would work
            # NOTE: This would ideally be detected by the client agent
            # But there's some issue with arm machines
            # See https://github.com/hashicorp/nomad/issues/18272
            # cpu_total_compute = 2000;
            cni_path = "$${pkgs.cni-plugins}/bin";
            # see https://github.com/hashicorp/nomad/issues/6536
            host_volume = {};
            host_network = {
              tailscale = { interface = "tailscale0"; };
              loopback = { interface = "lo"; };
            };
          };

          limits = {
            # NOTE: since we'll be accessing nomad through trusted sources we don't want
            # any limitations, faced issues when clicking on too many things in the
            # nomad ui
            http_max_conns_per_client = 0;
          };

          # nomad sd for prometheus
          # https://prometheus.io/docs/prometheus/latest/configuration/configuration/#nomad_sd_config
          # relabel viz: https://relabeler.promlabs.com/
          telemetry = {
            publish_allocation_metrics = true;
            publish_node_metrics = true;
            disable_hostname = true;
            prometheus_metrics = true;
            collection_interval = "30s"; # should be <= the prometheus scrape interval
          };

          # ecr support for docker
          # 1. IAM role to be assumed by ec2 instance
          # 2. Needs docker-credential-ecr-login to be installed on the machine
          #    echo "<acc>.dkr.ecr.<region>.amazonaws.com/<repo>" | docker-credential-ecr-login get
          # see https://github.com/hashicorp/nomad/issues/20630
          # see https://discuss.hashicorp.com/t/what-is-the-best-way-to-configure-nomad-to-pull-from-ecr-private-registries/20939/7
          # see (json parse) https://github.com/hashicorp/nomad/issues/7981
          # NOTE: If other public images start failing, we'd need to use "auth_soft_fail"
          plugin = [{
            docker = [{
              config = [{
                extra_labels = ["*"];
                logging = [{
                  type = "journald";
                  config = [{
                    labels-regex = "com\\.hashicorp\\.nomad.*";
                  }];
                }];
                auth = [{
                  # NOTE: We're not specifying the config directly, so no
                  # additional handling of docker config is required
                  # NOTE: If we needed to use config, we'd do it something like:
                  # config = "/etc/docker/config.json";
                  helper = "ecr-login";
                }];
              }];
            }];
          }];
        };
      };

      environment.systemPackages = with pkgs; [
        # base
        curl
        git
        neovim
        # utils
        htop
        ripgrep
        jq
        # ops
        # NOTE: Need dmidecode to get cpu/mem stats in cloud vms
        # usage: sudo dmidecode -t 4
        # usage: nomad node status -verbose -self | rg cpu
        dmidecode
      ];

      services.openssh = {
        enable = true;
        settings.PasswordAuthentication = false;
      };

      virtualisation.docker.enable = true;
      virtualisation.docker.rootless = {
        enable = false;
        setSocketVariable = false;
      };

      nix.gc = {
        automatic = true;
        dates = "weekly";
        options = "--delete-older-than 30d";
      };

      # docker aws combination
      # NOTE: This bugger had me going nuts because aws metadata services run in 169.254.169.254
      # see https://forums.docker.com/t/how-to-prevent-docker-from-creating-virtual-interface-on-wrong-private-network/124552/7
      # see https://github.com/NixOS/nixpkgs/issues/109389
      networking.dhcpcd.denyInterfaces = [ "veth*" ];

      networking.firewall = {
        enable = true;
        allowedTCPPorts = [ 22 443 80 ];
        # NOTE:
        # We run caddy as container, it runs as a reverse proxy to other services
        # and services running on the main host as-well. For this reason we need to
        # allow traffic from the containers to access our host network
        extraCommands = ''
          iptables -A INPUT -i docker0 -j ACCEPT
        '';
      };

      system.stateVersion = "24.05";
    }

TODO Build custom AMI

Storage

Storage FAQ

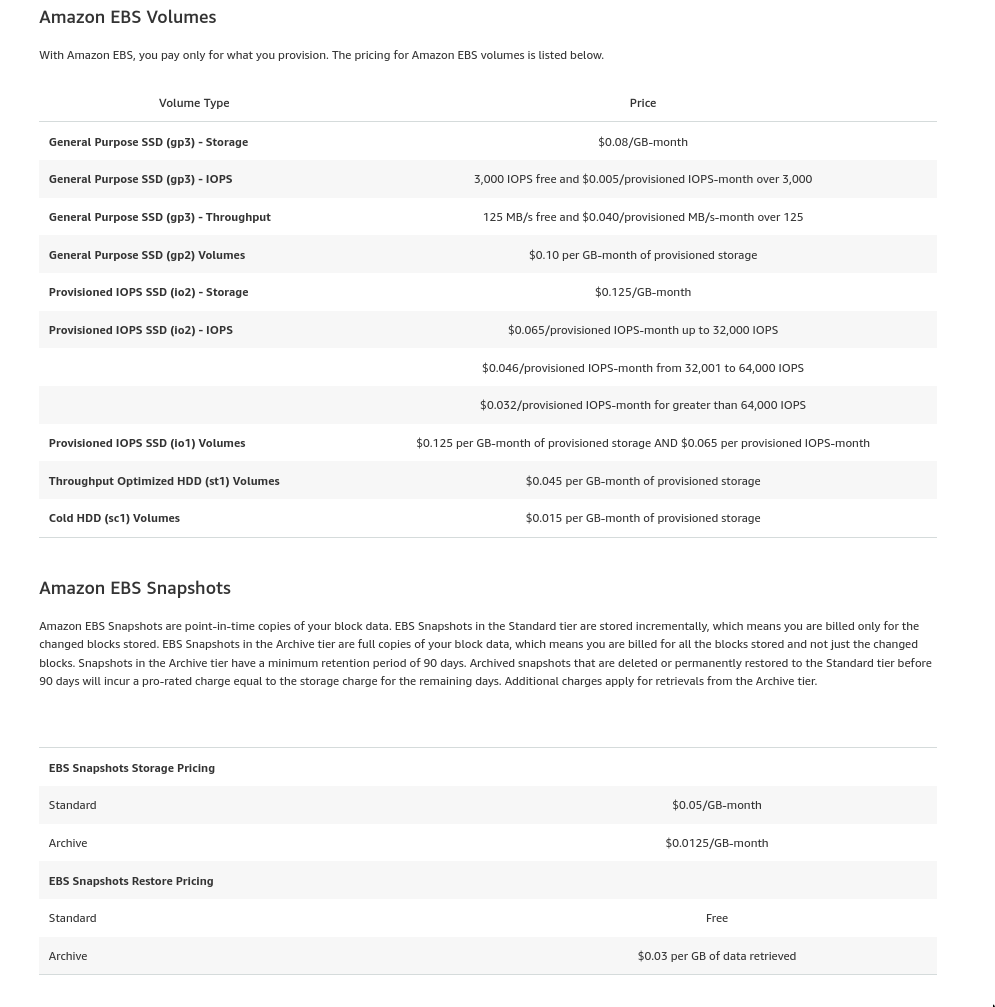

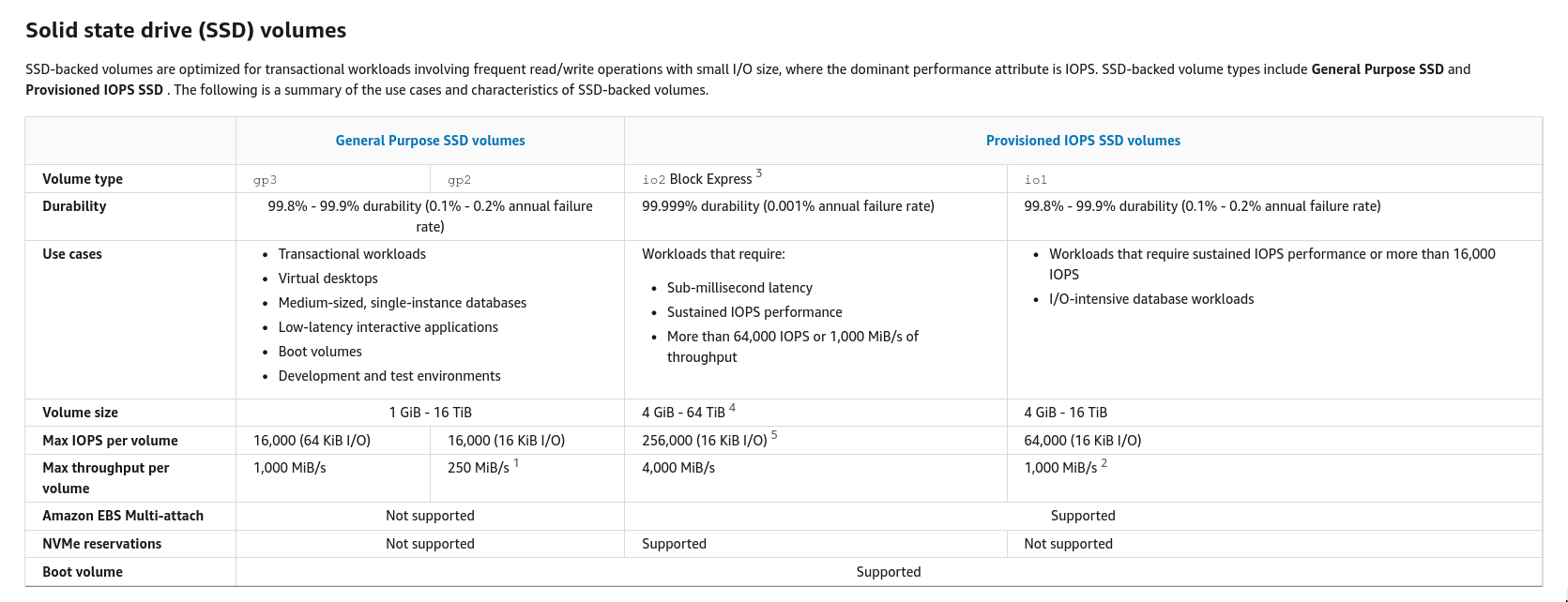

Can all EBS volumes go with all EC2 instances?

- No

- Instances also have certain IOPS limitations; you want to use only those instances that are compatible with the EBS volume you’re planning to wire it up with

IOPS

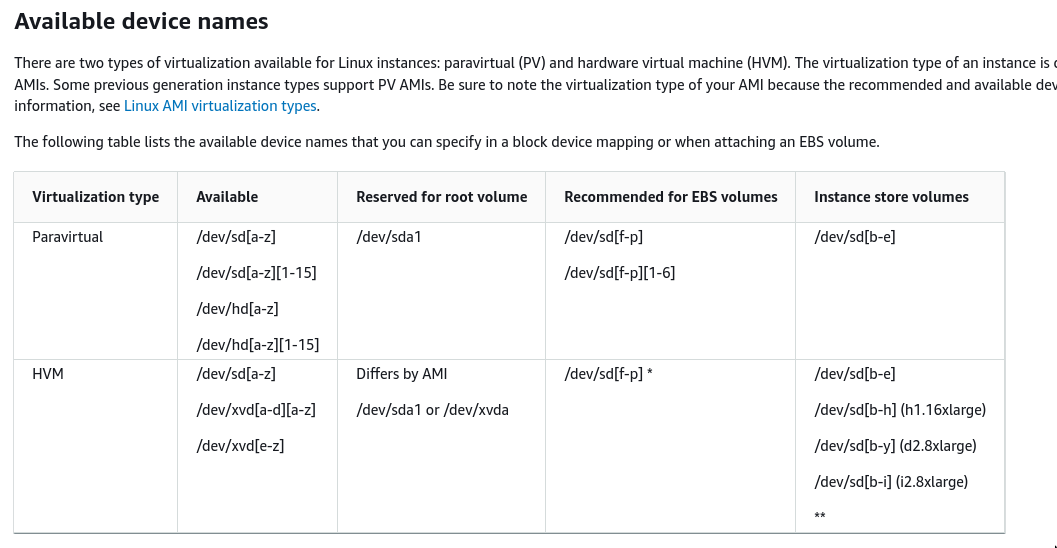

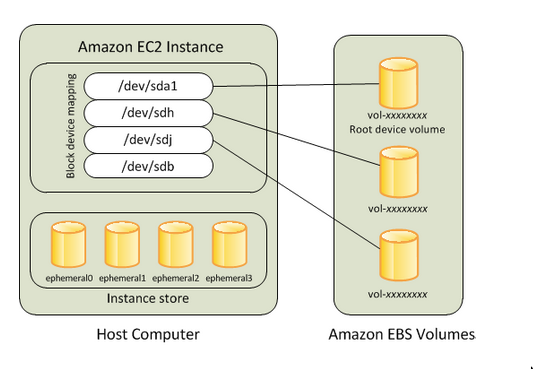

Volume naming in EBS and EC2 instances

- Different vendors handle the mount point differently, eg. in AWS it’ll show as mounting to `/dev/sdb` / `/dev/sdh` but will instead be mounted to `xvdb` or `xvdh`

- This actually depends on the AMI used

- Device names on Linux instances - Amazon Elastic Compute Cloud

Multi attach EBS?

- Not all volume types allow multi-attach

What is an EBS volume really?

A single EBS volume is already made up of multiple physical disks running in an AWS data center. EBS volumes are about 20x more reliable than a traditional disk. If that’s not enough, you can snapshot your EBS volumes to S3, which is designed for 99.999999999% durability. You can create new EBS volumes from these snapshots.

- reddit user

Elastic Block Storage (EBS) vs Elastic File System (EFS)

Can we shrink EBS?

- No

- Is there a good way to “shrink” EBS volume? : aws

- We can def grow

EBS Latency

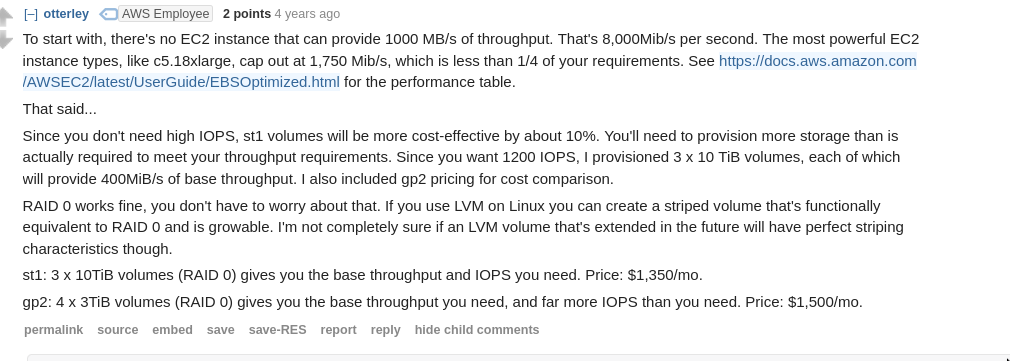

EBS & Snapshot Cost

- Snapshots against the same volume are incremental, so there’s not much added cost

EBS (Elastic Block Storage)

What types of volumes do we have? (conceptual, Tactical Knowledge)

- There’s root volume and there’s data volume(s)

- Both can be EBS

- If you decide to use LVM (Logical Volume Manager), then you’d

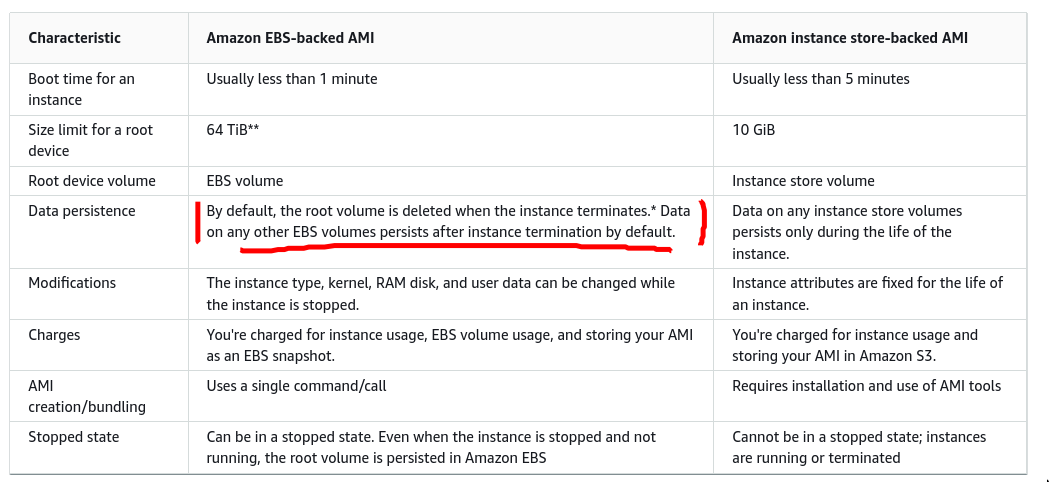

Root volume type (for AMI)

- When we create new instances we can specify the `ami`; the ami has a `root-volume-type`

- `root-volume-type` can be: `ebs` or `instance store`

- AWS recommends using an `ebs`-based ami

- By default when you use the `ebs` root volume type, it’ll use the `snapshot` provided by the `ami`. This is configurable at instance creation time.

- The root EBS volume by default gets deleted if the instance is destroyed (not stopped). Data EBS volumes by default stay as they are even if the attached instance is destroyed. (configurable)

Growing EBS

- Start small! With EC2 instances, you pay per GB of disk (EBS volume) that you provision - so creating a big disk is a fat waste of $$$

- You can grow in 2 ways

- Increase the number of EBS volumes

- Limit on how many EBS volumes can be attached to an EC2 instance: What is the max number of attachable volumes per Amazon EC2 instance?

- Increase the size of the volume itself

- Limit on max size of each EBS volume: up to 64TB, depending on the volume type

Process of Growing EBS

- From CLI/Terraform modify the target size/any other modification to the EBS Volume

- After modifying a volume, you must wait at least six hours and ensure that the volume is in the `in-use` or `available` state before you can modify the same volume.

- volume states: `creating`, `available`, `in-use`, `deleting`, `deleted`, and `error`

- modification states: `modifying`, `optimizing`, and `completed`

- After the EBS volume is resized, and is in `optimizing` or `completed` state, we:

- Need to extend the partition

- Need to extend the filesystem

- Now here is where having LVM (Logical Volume Manager) in your EC2 instance can help

- You can use vanilla linux ways to do it

- Or you can do it the LVM way

- Expand EBS volume (via tf/cli/console)

- Grow Partition - growpart

- Physical Volume Resize - pvresize

- Logical Volume Resize - lvresize

- Resize File System - resize2fs
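The in-instance part of the steps above can be sketched as a small helper that prints the commands in order. It is a sketch under assumptions: the filesystem is ext2/3/4 (so `resize2fs` applies), the device and logical volume names are hypothetical, and `partition_path` / `grow_commands` are illustrative helpers. It only builds the command lines and does not run anything.

```python
def partition_path(disk, part_num):
    """Return the partition device path, e.g. /dev/xvda1 or /dev/nvme0n1p1."""
    # Linux inserts a "p" before the partition number when the disk name ends in a digit.
    sep = "p" if disk[-1].isdigit() else ""
    return f"{disk}{sep}{part_num}"


def grow_commands(disk, part_num, logical_volume=None):
    """Commands to run as root once the EBS volume itself has been enlarged.

    Pass logical_volume (e.g. /dev/vg0/data) when the partition is an LVM
    physical volume; leave it as None for the plain partition + filesystem case.
    """
    part = partition_path(disk, part_num)
    cmds = [["growpart", disk, str(part_num)]]  # 1. grow the partition
    if logical_volume is None:
        cmds.append(["resize2fs", part])  # 2. grow the filesystem on the partition
    else:
        cmds.append(["pvresize", part])  # 2. grow the LVM physical volume
        cmds.append(["lvresize", "-l", "+100%FREE", logical_volume])  # 3. grow the LV
        cmds.append(["resize2fs", logical_volume])  # 4. grow the filesystem on the LV
    return cmds


# Hypothetical NVMe root disk whose first partition backs an LVM volume group.
for cmd in grow_commands("/dev/nvme0n1", 1, logical_volume="/dev/vg0/data"):
    print(" ".join(cmd))
```

Other filesystems need a different last step (XFS, for example, uses `xfs_growfs`), so treat the `resize2fs` call as specific to ext filesystems.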

Snapshots

- A snapshot is a backup of an EBS volume

- EBS volume is the `virtual disk` associated with an EC2 instance

How/When to take snapshots?

- If the EC2 instance does not run a database

- Snapshots can be taken in a live system

- If the EC2 instance runs a database

- Snapshots should be taken only after the instance is stopped

- i.e You want to take the snapshot in a consistent state

Snapshots vs AMIs

- AMI is just a metadata wrapper around snapshots

- Usually you’d want to create either an AMI or a snapshot, not both, but this depends on the scenario

- Summary of the metadata

- There can be one or more snapshots (root volume, any additional ebs volumes).

- Launch perms for the ami

- And block device mappings for the ebs volumes.

Snapshots vs Backups

We do snapshots every night and delete the ones that are older than 35 days. Taking a snapshot is a lot faster depending on what the differential is between the previous snapshots. For example, if there is a 1 TB change in data between the previous snapshot and the one you’ll be creating, then it may take a while to get created. We’ve seen this a couple of times in our production environment, so it all depends on what’s changing on the volume.

- Having snapshots is not a sure-shot backup strategy

- If snapshots fail for any reason, you should have another, completely unrelated backup method ready to go.
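The "delete the ones older than 35 days" routine from the quote above can be sketched as a pure function. It assumes snapshot records shaped like the entries boto3's `describe_snapshots` returns (a `SnapshotId` string and a timezone-aware `StartTime`). The IDs and dates below are made up, and `expired_snapshot_ids` is an illustrative helper, not part of any AWS SDK.

```python
from datetime import datetime, timedelta, timezone


def expired_snapshot_ids(snapshots, now, keep_days=35):
    """Return IDs of snapshots that started more than keep_days before now."""
    cutoff = now - timedelta(days=keep_days)
    return [s["SnapshotId"] for s in snapshots if s["StartTime"] < cutoff]


# Made-up records for illustration.
now = datetime(2024, 6, 30, tzinfo=timezone.utc)
snapshots = [
    {"SnapshotId": "snap-old", "StartTime": datetime(2024, 5, 1, tzinfo=timezone.utc)},
    {"SnapshotId": "snap-new", "StartTime": datetime(2024, 6, 29, tzinfo=timezone.utc)},
]
print(expired_snapshot_ids(snapshots, now))  # ['snap-old']
```

The returned IDs would then be passed one by one to a delete call. Because snapshots are incremental, deleting an old one only frees the blocks no later snapshot still references.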

Cloudwatch

Period

Period is a query level thing

- Metrics stored with

- Standard resolution: Can be retrieved with a `period` that is a multiple of 60s

- High resolution: Can be retrieved with a `period` of 1s, 5s, 10s, 30s, or any multiple of 60s

- If you try querying a standard resolution metric (1m) with a `period` of 30s, you cannot get granularity at that level. You’ll get the same data as 1m.

- On the other hand, you can query 1m resolution metrics at a higher period like 5m. In the case of a 5 minute period, the single data point is placed at the beginning of the 5 minute time window. In the case of a 1 minute period, the single data point is placed at the 1 minute mark. So the graph for the same looks different.

- Higher granularity period + higher granularity resolution, Good for

- Analogy

- Resolution: photo resolution (Eg. mega pixels)

- Period: Zoom level on the photo when viewing it

- Dynamic time period when viewing graph: Panning on the image
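The period rules above can be written down as a tiny check. This is only a sketch of the rule as stated in these notes, and `is_valid_period` is a hypothetical helper, not a CloudWatch API.

```python
HIGH_RES_PERIODS = {1, 5, 10, 30}  # sub-minute periods, high-resolution metrics only


def is_valid_period(period_s, high_resolution=False):
    """Check whether a query period (in seconds) is allowed for a metric."""
    # Any positive multiple of 60s works for both standard and high resolution.
    if period_s > 0 and period_s % 60 == 0:
        return True
    # Sub-minute periods only make sense for high-resolution metrics.
    return high_resolution and period_s in HIGH_RES_PERIODS


print(is_valid_period(300))                       # True
print(is_valid_period(30))                        # False
print(is_valid_period(30, high_resolution=True))  # True
```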

Resolution

| Resolution Type | Granularity/Resolution | Example |

|---|---|---|

| Standard | 1m | AWS service metrics, Custom metrics |

| High Resolution | 1s (<60s) | Custom metrics |

Metric Type (Related to ingestion)

- Basic monitoring (Ingestion 5m)

- Detailed monitoring (Ingestion <5m)

Metric Retention & Rollups

| Resolution | Retention | Notes |

|---|---|---|

| <60s | 3h | high-resolution |

| 1m | 15d | |

| 5m | 63d | |

| 1h | 15mo | |

- CW stores data of terminated instances as-well

- CW metrics have automatic roll up. i.e Data points that are initially published with a shorter period are aggregated together for long-term storage.

- Eg.

- We’re collecting data with 1m resolution

- Till 15d: 1m resolution, can retrieve with resolution of 1m

- After 15d: Aggregated, can retrieve only with resolution of 5m

- After 63d: Aggregated, can retrieve only with resolution of 1h
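The retention table and the rollup example can be folded into one lookup: given how old a data point is, what is the finest period it can still be retrieved at? A minimal sketch, assuming the metric was published at 1m (or sub-minute) resolution and taking "15mo" as 455 days; `finest_period_seconds` is a hypothetical helper.

```python
def finest_period_seconds(age_days, high_resolution=False):
    """Smallest period (in seconds) still retrievable for a data point of this age.

    Returns None once the data point has aged out of retention entirely.
    """
    if high_resolution and age_days * 24 <= 3:
        return 1      # sub-minute data is kept for 3 hours
    if age_days <= 15:
        return 60     # 1m data points are kept for 15 days
    if age_days <= 63:
        return 300    # then rolled up to 5m, kept for 63 days
    if age_days <= 455:
        return 3600   # then rolled up to 1h, kept for 15 months
    return None


print(finest_period_seconds(1))    # 60
print(finest_period_seconds(20))   # 300
print(finest_period_seconds(100))  # 3600
```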


Links and Resources

AWS builder library

- Most relevant

- Semi relevant

- Less relevant

- Timeouts, retries and backoff with jitter

- Challenges with distributed systems

- Avoiding fallback in distributed systems

- Static stability using Availability Zones

- Caching challenges and strategies

- Automating safe, hands-off deployments

- Avoiding overload in distributed systems by putting the smaller service in control

- Making retries safe with idempotent APIs

- Minimizing correlated failures in distributed systems

- Using dependency isolation to contain concurrency overload

- Avoiding insurmountable queue backlogs

- Using load shedding to avoid overload