tags : Operating Systems, Systems, Database Locks, Lockfree

FAQ

What Structured concurrency?

- Structured concurrency is a higher-level pattern that helps in managing and controlling concurrent tasks in a structured manner. It’s a wrapper over what concurrency style we apply to give us better control of error handling, leaking resources, leaving tasks unfinished etc.

- Structured concurrency can be applied to any style, more of a meta thing.

How do concurrent processes communicate?

Who to do parallel?

- Some internet comments

- Shard your data per thread and treat your multithreaded (or multimachine) architecture as a tree, not a graph: where dataflow doesn’t need to pass between tree branches.

- Things eventually boil down to dividing work in a way that parallelizes nicely, exploits cache well, reduces the need to share information between threads, etc

- The more you come to understanding a problem in CS the more your code morphs from something general into becoming one big, bespoke data structure.

- This goes w my mental model of parallel programs being independent and so on.

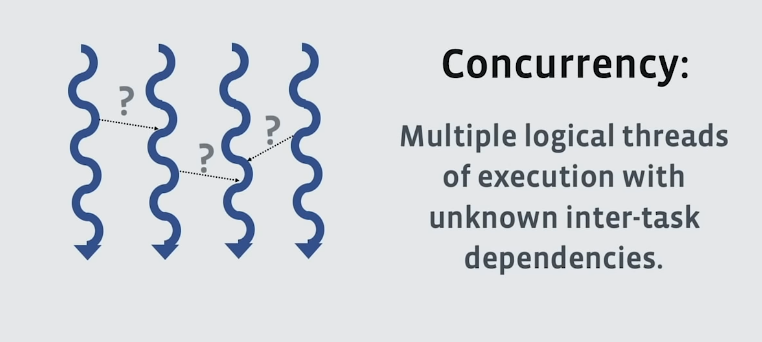

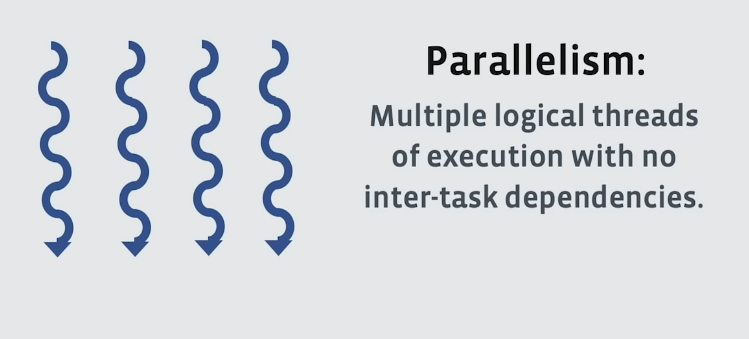

Concurrent V/S Parallel

The use of word “thread” is not very strict here.

- Parallelism is a subset of concurrency

- Concurrent is 1 queues and 1 coffee machine. Parallel is 2 queues and 2 coffee machines.

- Concurrency is juggling, parallelism is using two hands to do it

- Concurrency is what you use to make it correct, parallelism is to make it faster.

- In practice

- If you had

single coreandmultiple threads, those threads will be run concurrently. Eg. only one thread at a time, but no thread can assume any other thread’s current state. - If you have

multiple cores, it is possible to achieve parallelism by runningtwo threads at exactly the same time.

- If you had

- Example

- Concurrent: Internals of a web server

- Parallel: Google’s Mapreduce

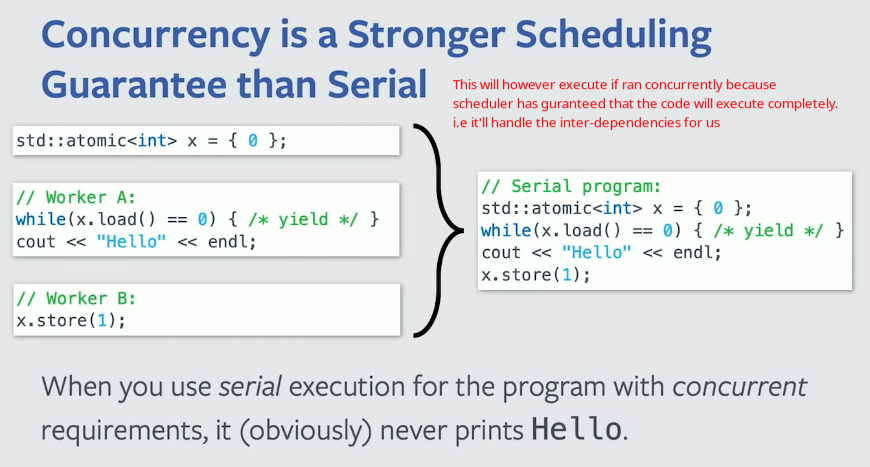

Concurrency

- Multiple things are in the middle of running, and they have to communicate/coordinate with each other in order to get the overall job done. This means, more work for the scheduler as things are dependent on order of execution.

- Concurrency is a way to organize code: pieces of your program get more flexible ways to communicate with each other than just call/return.

- Concurrency is important when you’re doing anything where the CPU has to wait. For example, network and disk heavy workloads.

- Part of the value of concurrency is that it can highlight opportunities for parallelism.

- If the processes are independent of each other you’re not guaranteed to benefit from concurrency, especially if you only have 1 core.

- Doing it wrong causes: Semantically wrong behavior (producing the wrong result, failing to produce a result at all).

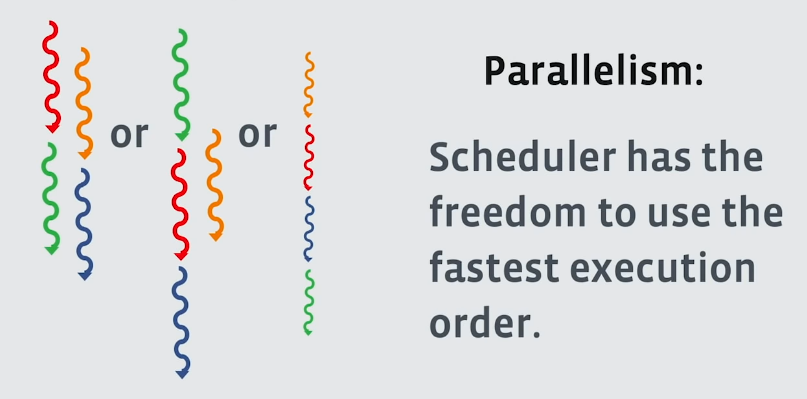

Parallelism

- Multiple things are in the middle of running, and several of them make progress simultaneously.

- Parallelism is a way to speed up code: pieces of your program don’t have to all wait their turn while one task at a time executes.

- If the processes are independent of each other:

- You can distribute those calculations to 8, 16 or 32+ cores and significantly cut down your runtime.

- It’s a promise by the user to the scheduler that the result of the code will be correct however it is scheduled to run.

- You can distribute those calculations to 8, 16 or 32+ cores and significantly cut down your runtime.

- Doing it wrong causes: inferior performance.

- We can use concurrent mechanism(eg. thread pools) to run parallel code but it’ll be slow. Because, now the user is not giving the scheduler the guarantee that there are no cross-task dependencies.

Serial

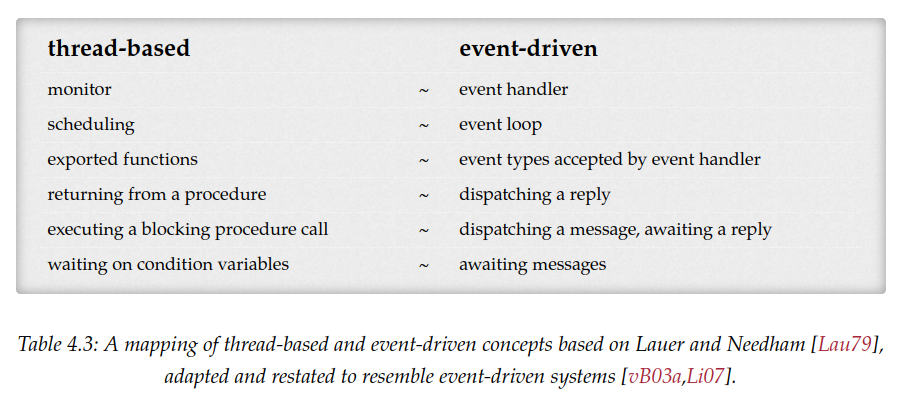

Concurrency Styles

This is not a strict list, just trying to categorize shit i see in the wild. The terminologies are so confusing. someone needs to make a feature matrix or something, maybe by language and make it the defacto thing when ppl argue about these things. These ideas can be using together, some might be othogonal etc.

Thread based

- See Threads and Coroutines

- Can share memory/ or not

- Uses things like locks, mutexes, countdowns, condition variables, semaphores etc.

- A sequential flow of control within a process.

Event Queue/Event driven

- One a single thread exists!

- A single loop reads from the event queue and invokes the handlers.

Actor Model

- See Actor Model

- No concept of “events”, “threads” or a “processes”, only Actors and messages.

CSP

- Communicating sequential processes - Wikipedia

- This is related to Trampolining

- Control is passed explicitly in the form of a

continuation(See Coroutines)

Instruction level

SIMD & MIMD

See Flynn’s Taxonomy

GPGPU

See GPGPU

Statement level based

- Language syntax based.

channelsandgroutinesin Golang.- Atomic operations in languages

Distributed

- Multiprocessor and Multi-computer stuff

Primitives and Issues

Blocking primitives

Mutex (Mutual Exclusion Lock)

- Mutex guarantees that checking or modifying the value of a semaphore can be done safely

- OS guarantees not to create a race condition between threads attempting to use the lock.

Semaphores + Mutex

lock := acquire_a_lock()

register := read_balance()

register += 100

write_balance(register)

release_lock()- +ve counter that can be used to synchronize multiple threads.

Spinlocks

Why would you consider spinlock?

- Acquiring one is a “busy” operation, i.e burns CPU cycles

- This makes sense if the context switch of sleeping mutex has an overhead which is more than the busy waiting action for the lock.

- Usually performs worse in high lock congestion situations. As spinlock will burn achieving nothing.

- Spinlocks are non-sleeping locks (busy waiting)

- Code waiting for a spinlock would spin in a loop until the lock becomes available.

- Despite their advantages, spinlocks couldn’t replace semaphores entirely due to their inability to sleep in the linux kernel.

- Also see Spinlocks Considered Harmful

- A close look at a spinlock (2021) | Hacker News

Condition Variables + Mutex

critical_section do

register := read_balance()

register += 100

write_balance(register)

end- Implement more complex conditions under which threads execute.

- Generally used to

- Avoid busy waiting while waiting for a resource to become available.

- Instead, implement a condition under which a thread executes

- Inversely, implement a condition under which the thread is blocked

Semaphores vs Condition Variables

- Semaphores and condition variable can be used together

- Condition variables has no counter/memory unlike semaphores.

- The shrinking role of semaphores {LWN.net}

Non-blocking primitives

Lock-free

- See Lockfree

Concurrency issues

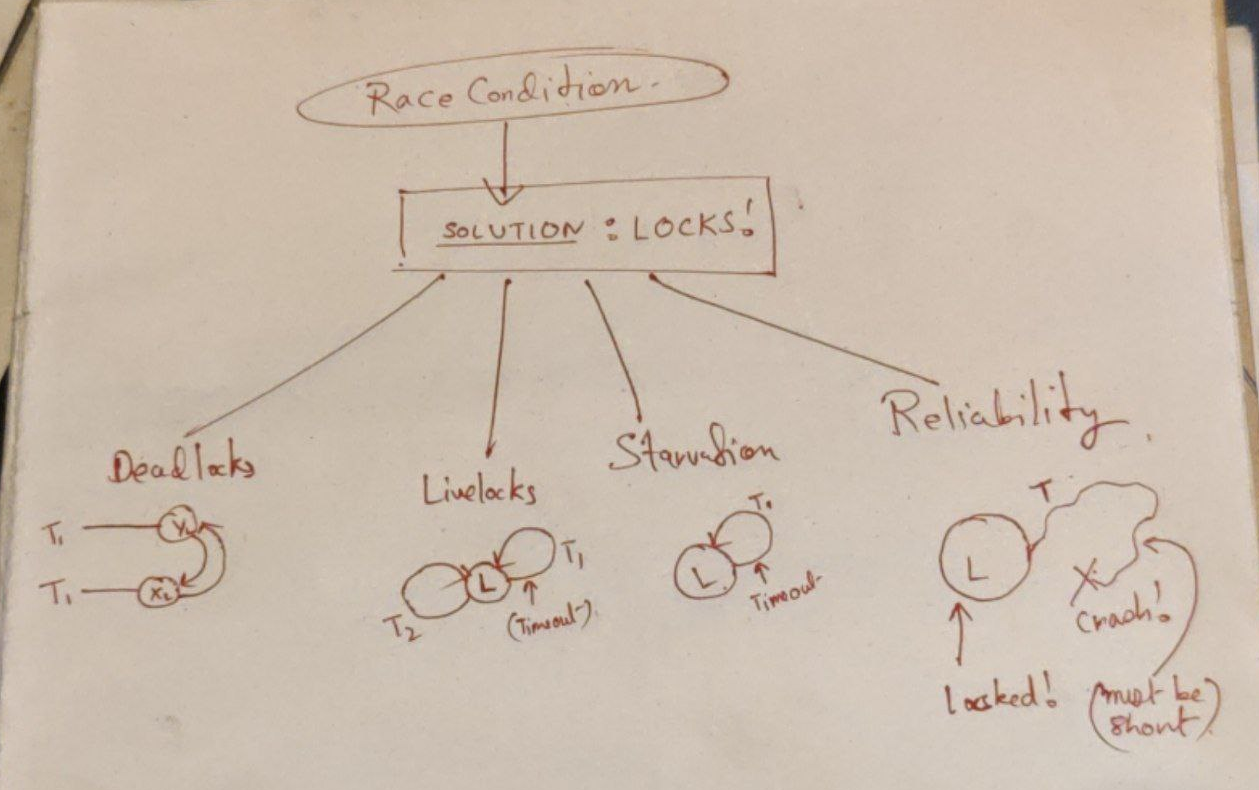

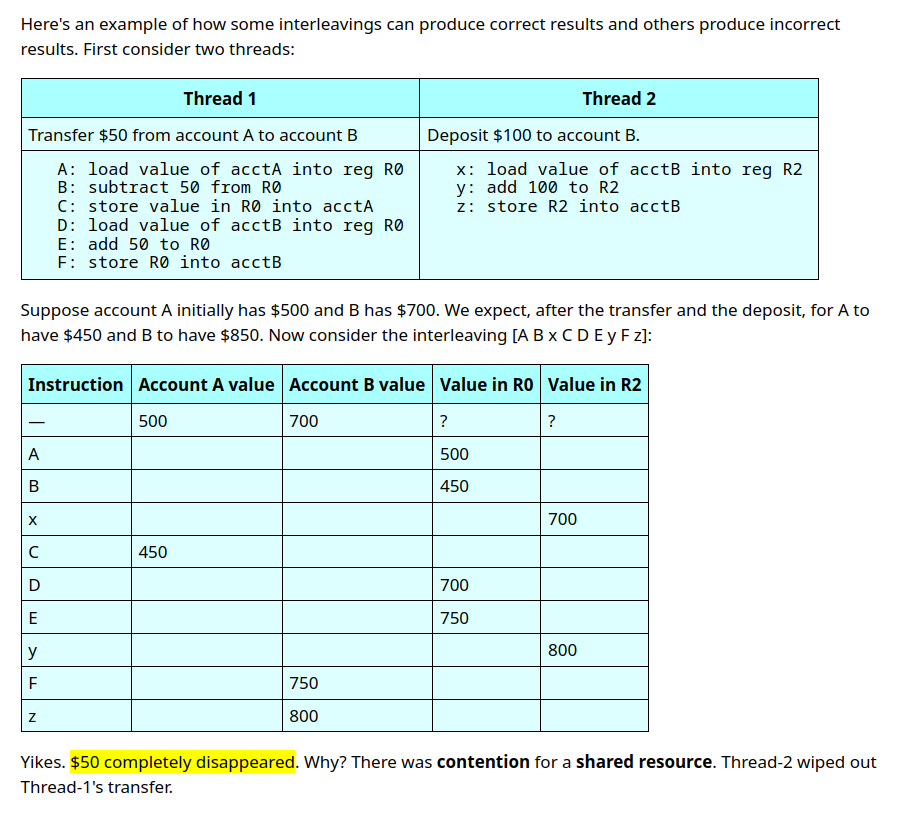

Race conditions

- Result depends on: Relative timing of events in the threads.

- Can exist on both single core and multicore systems.

-

Solution: Mutex Lock + Critical Section (atomic)

- Only 1 process deals w

critical sectionat a given time. - Critical section is basically chunking subsequences into atomic instruction

- Now this solves Race conditions, but causes other issues like

deadlock,livelock,starvation,reliability

- Only 1 process deals w

Deadlocks

- One or more threads are stuck waiting for something that never will occur. Something only the other thread which is also blocked can unblock.

TX 1 asks for A, gets lock A TX 2 asks for B, gets lock B TX 1 asks for B, blocks waiting for lock B (held by TX 2) TX 2 asks for A. Boom! - Can be solved via understanding the order and re-thinking.

Livelock

- No progress is made because

- Each thread tries to enter its critical section

- then times out, then tries again, and so on, forever.

- Can be solved via some jitter or something

- If deadlock is like playing on the beats, livelock is like playing on the offs. Either-way you end up in a situation where things are stuck.

Starvation

- Put upper bound on the time a process enters critical section while others wait.

Reliability 🌟

- Thread crashes in the middle of the critical section. What now?

- Thread also could be holding lock other processes wait for. Too bad now.

- It’s developer responsibility to make sure that critical sections are short and they terminate.

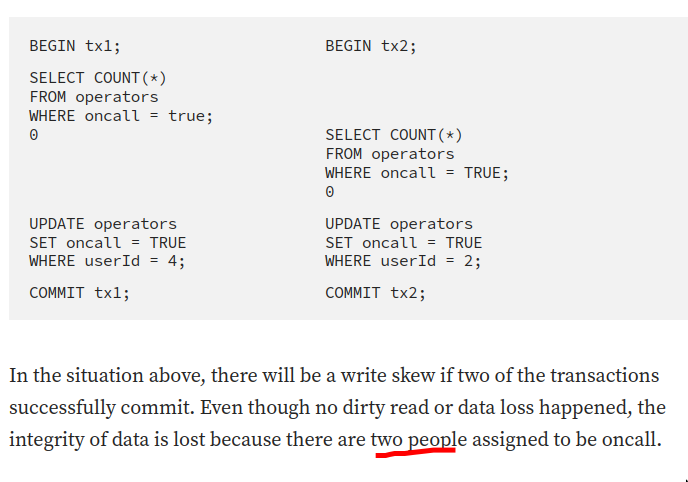

Anomalies

See the harmitage repo for list of it

- Dirty reads

- Data loss

- Write skews: Logical anomaly. Logical constraints on data is compromised. Eg. set one person to oncall.

Theoretical view of concurrency

Modeling concurrency

See Formal Methods

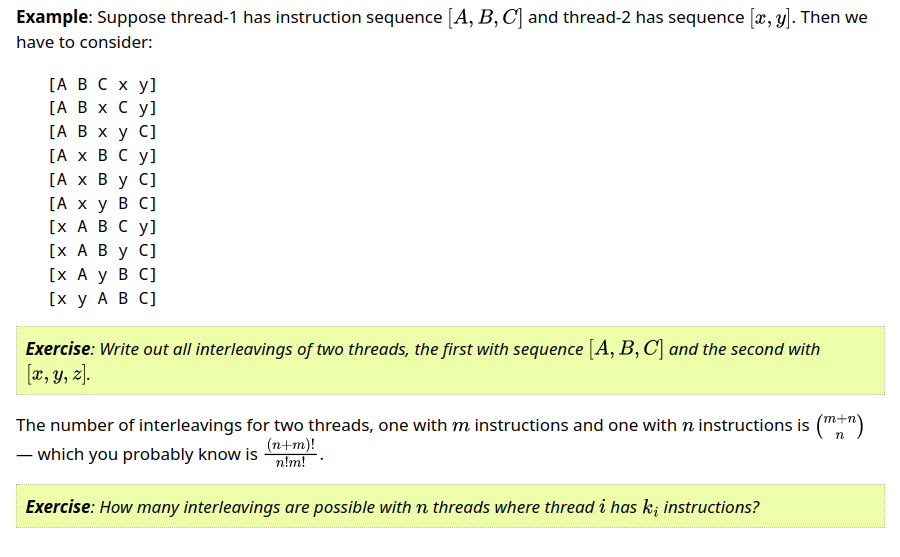

Non-realtime interleaved execution model

- Study of interleaved execution sequences of atomic instructions

- Each of the instructions

- Executes arbitrarily

- In finite amount of time.

- Can take many interleavings

- No assumption of

- Relative speed of instructions

- How the scheduler is working

Correctness

What Hoare said

-

Partial Correctness

if precondition & program terminates postconditions remain same (holds) end- If an answer is returned

- It will be correct

- This can be proven via Correctness criteria

- If an answer is returned

-

Total Correctness

if precondition terminate program & postconditions remain same (holds) end- If an answer is returned

- It will be correct

- It will return(terminate)

- Proving if a program will terminate is undecidable

- If an answer is returned

Correctness criteria

Properties of a program are mathematical statements we can make about it’s traces.

-

Safety properties (Always hold, BTNH)

- Bad things never happen

- Requires only one counter example to refute

- What to prove?

- Never does the wrong thing

- Never spits out the wrong answer

- Never violates invariants during execution

- Most of us have a natural intuition for safety properties as programmers.

- Example: Crash like things, Vulnerabilities, Memory errors etc.

-

Liveness properties (Eventually hold, GTAH)

- Good things always happen

- What to prove?

- A finite proof of progress resulting in the result we need

- Example: Guaranteed availability, and termination

Concurrency Consistency Models

See Concurrency Consistency Models

Reading and Writing in concurrent systems

- Type of access is fundamental

- Reads: Multiple threads can read data w/o any synchronization and conflicts

- Writes: Write access must be exclusive (that is, at most 1 thread at a time)

Mutex vs RWMutex

- Mutex

- Exclusive lock.

- Only one thread can read from the counter at one time

- RWMutex

- It’s

readers-writerlock. (readers is plural, writer is singular) - Semi exclusive

- If any reader holds the read lock, you cannot acquire write lock. If you request a write lock and readerlock > 0, future request read locks will be blocked till previously requested write lock is acquired and released. This is there to prevent starvation of write lock in read heavy programs.

- It’s

Scaling Reads and Writes

Scalability: How our algorithm performs when we give it more resources

Scheduling

- M processors and N threads. If M < N you need a scheduler

- In operation of a scheduler,

threadcan have manystates

By Language

Javascipt

- See Javascript Runtime and Browser

- The single-threaded model has made Node.js a popular choice for server-side programming due to its non-blocking IO, making handling a large number of database or file-system requests very performant.

- However, CPU-bound (computationally intensive) tasks that’s pure JavaScript will still block the main thread.

- To achieve real paralleling, you may need to use workers.

- A web worker or a cross-origin iframe has its own stack, heap, and message queue.

Golang

See Golang, Concurrency in Golang

Rust

- Rust offers safety from data races(not race conditions)

Python

- See Python and Python Concurrency